Babbage1834.com

This site is dedicated to describing the computer and its history in the simplest and shortest way possible.

Each and every day we interact with thousands of computers but very few of us know what they are, which devices they are part of and especially how they came to be.

It is astonishing to see that it only took humanity two hundred years to create a brand new life form—one that is silicon based, ubiquitous and still in its infancy.

Just look around: it is obvious that computers surpass us in many ways. In one sense, they are diminishing the sharpness of our intellectual abilities, sometimes replacing them altogether, drawing us into an endless search for mindless facts.

And yet, if used properly, they could make our 21st century technological society the brightest star of them all.

So, why retell a story when there are already tons of books, documentaries, websites... dealing with the subject?

Well, the main reason is that they often fall short of the truth because of personal bias and/or limited research by their authors, or they just tell you too much, dumping anecdotal evidence all over. This has created a veil of confusion which has kept the simple, collective answers from rising to the top.

We have spent the past 15 years watching, reading and analyzing the contents of thousands of documents (lectures, interviews, books, magazines, videos...) and, after a thorough filtering, we have distilled our findings into a pure collection of fundamental facts.

The result, presented on this site, is by far the most concise and yet most complete framework of the history of computing to date. It comprises only the major milestones that marked the path taken by the main players in this world changing journey.

This newly minted computer history is presented in the following four chapters. Each one can be read in 1 minute (headings), 10 minutes (more headings) or an hour (the whole enchilada).

Thus you can discover, understand or even become an expert in the field in 5 minutes, 50 minutes or just a few hours.

Unlike any other presentation, you decide on how long to spend on each subject, not us.

Leave when it gets too much. Come back often and discover something new every time.

Enjoy!

Computer History in Minutes

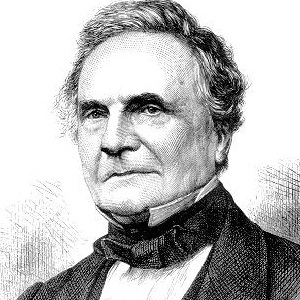

Charles Babbage: Designer of automatic mechanical calculators, inventor of the computer

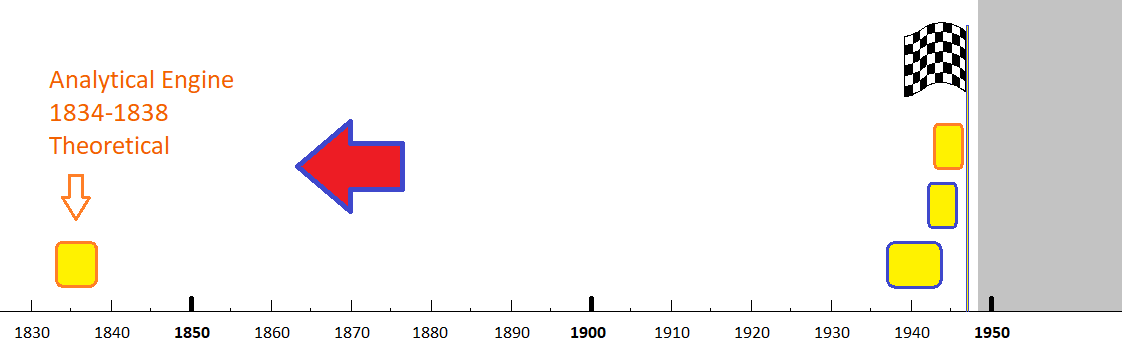

The first glimpse of an idea of a programmable digital calculator came to Victorian era British inventor Charles Babbage around November 1834.

After months—years—of brainstorming, researching, tinkering with his machine... by 1848 he finally settled on the design of what we now call a computer: its architecture, the concept of software and basically everything else.[1]

This extraordinary journey had started in 1822, when Babbage decided to simplify the production of books of mathematical tables by creating a mechanical calculator that could do math and write its results automatically without human interaction.

He called his automatic mechanical calculator a Difference Engine because it used the clever method of differences which replaced a complicated mixture of multiplications, additions and subtractions by simple additions, when computing mathematical tables using polynomial approximations.[2]
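
As a minimal sketch of the idea (our illustration in Python, not Babbage's notation), the code below tabulates a polynomial from its starting differences. The function names and the sample polynomial x² + x + 41 are assumptions chosen for the example. After the seed values are computed once, every further entry comes from additions alone.

```python
def initial_differences(values):
    """Return the first entry of each difference row built from the seed values.

    For a polynomial of degree n, n + 1 seed values are enough.
    """
    diffs = []
    row = list(values)
    while row:
        diffs.append(row[0])
        # Each new row holds the differences between neighbours of the previous one.
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs


def difference_table(diffs, count):
    """Generate `count` table entries using additions only."""
    state = list(diffs)  # state[0] is the current value, state[1:] its differences
    table = []
    for _ in range(count):
        table.append(state[0])
        # Add each difference into the entry above it, working top to bottom.
        for i in range(len(state) - 1):
            state[i] += state[i + 1]
    return table


def poly(x):
    # Hypothetical example polynomial: x^2 + x + 41
    return x * x + x + 41


seeds = [poly(x) for x in range(3)]  # degree 2, so 3 seed values
table = difference_table(initial_differences(seeds), 8)
print(table)
```

For a polynomial of degree n, the sketch only has to hold n + 1 numbers and keep adding each one into its neighbour, which is why a machine restricted to addition is sufficient.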

Ten years later, with no end in sight, he had an epiphany and realized that he could design a brand new machine that could compute the values of any function derived from analytic geometry.

Analytic geometry had been invented two centuries earlier and was used to describe our world within a coordinate system. Its most striking achievement was to predict the motion of heavenly bodies (but to be more down to earth and practical, it also described how cannon balls flew.)

Babbage realized that for the first time in human history, a machine could be used to study the Universe!

If he could build his mechanical wonder, scientists' tedious manual calculations well into the night would no longer be needed. And of course human errors in mathematical tables, which sank so many ships (literally and figuratively), would be a thing of the past.

Charles Babbage commenced work on the design of the Analytical Engine in 1834... and by 1838 most of the important concepts used in his later designs were established

...the method of differences supplied a general principle by which all Tables might be computed through limited intervals, by one uniform process. Again, the method of differences required the use of mechanism for Addition only.

Simon Schaffer introduces Babbage's engines on the 2018 BBC documentary Mechanical Monsters (6:19 to 11:28)

As previously mentioned, his Eureka moment happened around November 1834, after he came to the conclusion that numbers should not be his machine's business, but that instead, it should be dedicated to reading lists of instructions that, in turn, would make it work on numbers.

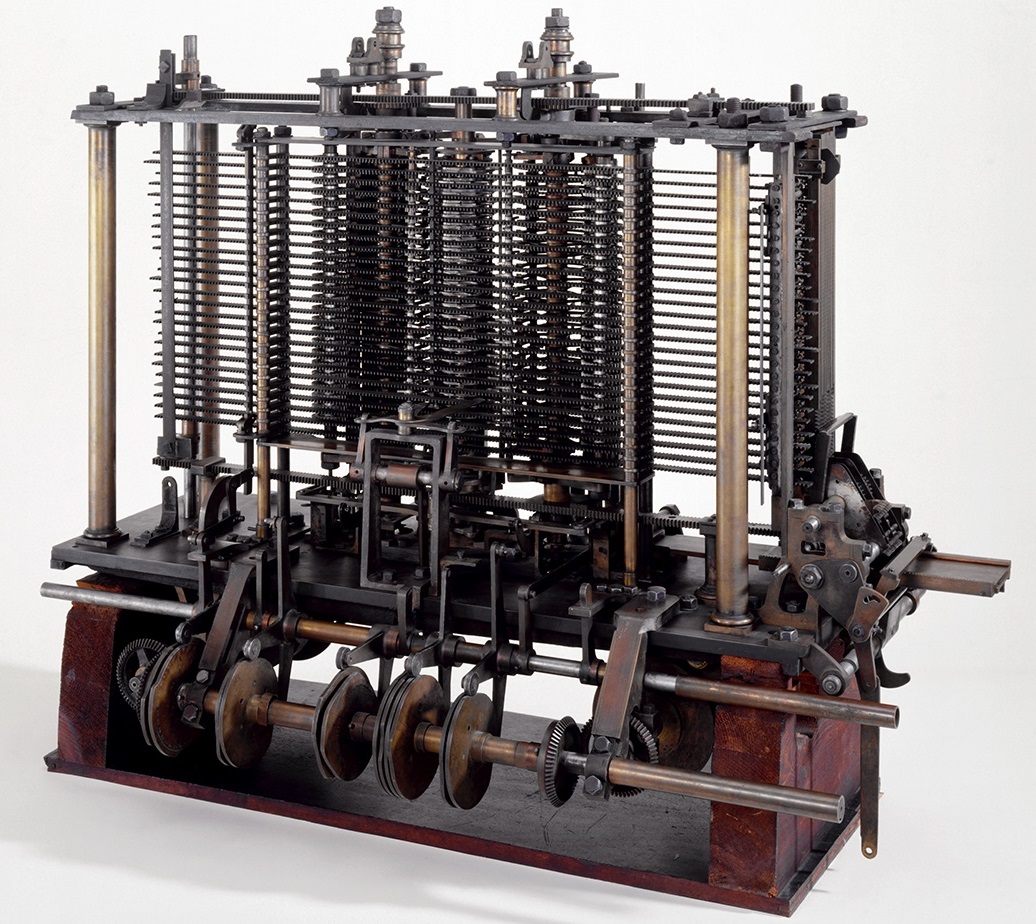

A very, very small part of what would have been Babbage's cogwheel computer, the Analytical Engine, that he built with his own money, during his lifetime

His new machine would read lists that looked like:

What seemed to be an added level of complexity (read, do math) had, in fact, simplified his endeavor tremendously by separating two independent requirements:

His new machine would compute the various values of any mathematical equation by reading and executing the instructions contained in its list, one instruction at a time, sequentially.

To work on another equation would only require a change of list—a change of program—and nothing else.

Babbage named his program reader an Analytical Engine. Its descendants are our computers.

Nowadays, we call the part that never changes Hardware, the lists of instructions are called Software and when the software is stored in permanent memory it is called Firmware.

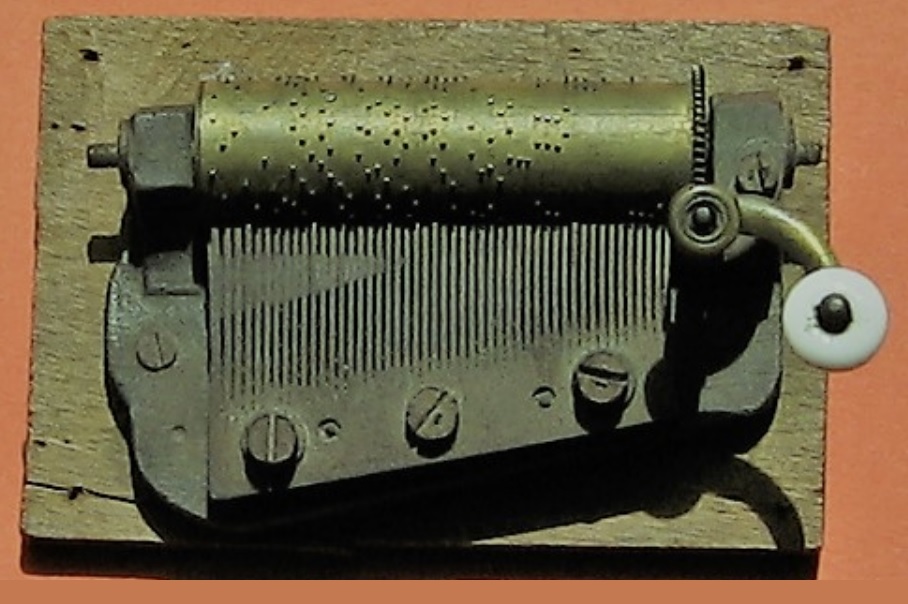

Music box cylinder. Each peg represents a note to be played.

Babbage needed a reliable mechanical memory to write his programs on, something that was simple, interchangeable and infallible.

His first choice was to go with drums like the ones found in music boxes of his time.

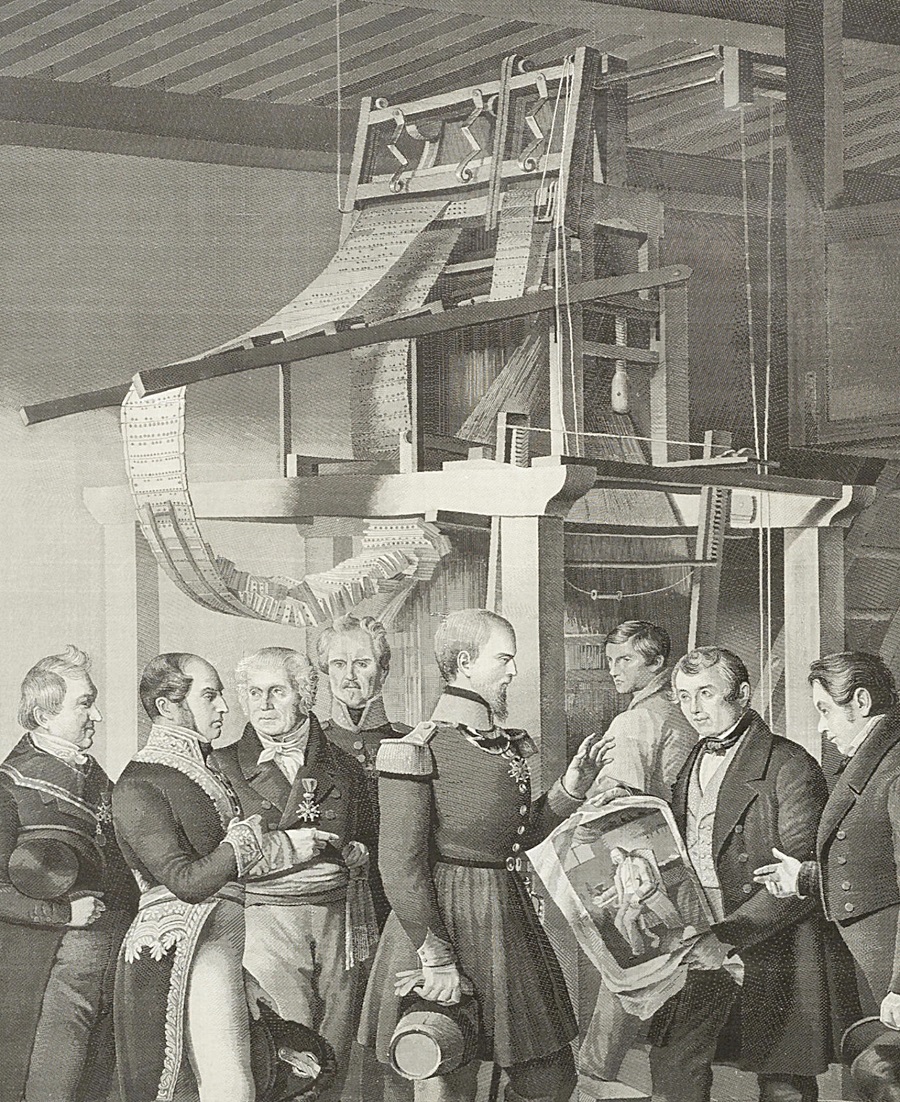

In 1836, he replaced them with punched cards from the textile industry:

The introduction of punched cards into the new engine was important not only as a more convenient form of control than the drums, or because programs could now be of unlimited extent... it was important also because it served to crystallize Babbage's feeling that he had invented something really new, something much more than a sophisticated calculating machine.

If Babbage had succeeded at building either his automatic mechanical calculator (Difference Engine) or his cogwheel computer (Analytical Engine), we would live in a different world today.

Unfortunately, the goals he set (memory size, precision...) were so high for the technology of his time, and for the amount of money that he and the British government were willing to spend, that he was unable to build any substantial part of either of his engines during his lifetime.[1]

The British government only helped Charles Babbage financially for his first machine, the Difference Engine. Babbage alone paid for everything to support the design of his mechanical computer, the Analytical Engine

And yet, both of his machines got built eventually.

First, it was his cogwheel computer,

It cost between $300,000 and $400,000 to build the ASCC. A sizable part of that came from the US Navy, from the beginning of 1942, which in return reserved the right to use the calculator until the end of the war.

Original text in French:

...la construction de l'ASCC avait coûté entre 300 000 et 400 000 dollars. Une bonne partie fut couverte par les fonds avancés par la Marine qui, dès le début de 1942, s'était réservé l'usage du calculateur pour la durée de la guerre.

Second, it was his Difference Engine,

It was not until 1848, when I had mastered the subject of the Analytical Engine, that I resolved on making a complete set of drawings of the Difference Engine No. 2. In this I proposed to take advantage of all the improvements and simplifications which years of unwearied study had produced for the Analytical Engine... I am willing to place at the disposal of Government... all these improvements which I have invented and have applied to the Analytical Engine. These comprise a complete series of drawings and explanatory notations, finished in 1849, of the Difference Engine No. 2,—an instrument of greater power and greater simplicity than that formerly commenced...

On Friday 29 November 1991 the engine performed its first full automatic error-free test calculation... The machine was faultless. We had done it. We had built the first Babbage engine, complete and working perfectly, twenty-seven days before Babbage's 200th birthday.

A Jacquard loom with punched card for data, successfully used in the textile industry since 1805.

For the first two years (1834-1836), Babbage wanted to use drums to store his instructions. A nice feature that came with a drum was the automatic repetition of the program it contained, since it was re-run after each full cylinder rotation, going over the same list of instructions repeatedly until the machine was stopped (repetition was a fundamental characteristic of his Difference Engine).

Today, we call this feature a programming loop.

But punched cards could go on forever, so Babbage had just lost this automatic repetition feature.

His solution was to implement a programming loop in software, which was accomplished by creating an instruction telling the computer to physically move the stack of cards back to a previous location, and then to resume its sequential work from there (rewinding the cards back to the top if you want to simulate a drum).

And not to leave a brilliant idea alone, he also created an instruction for conditional branching. Conditional branching is used to break out of a programming loop, or to just go to a different place in the program once a specific condition has been met. His machine would do it by physically moving the stack of cards backward or forward by a certain number of cards before resuming its work.
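
The card-moving idea can be sketched in a few lines of Python. This is a toy model under our own assumptions, not a reconstruction of the Analytical Engine's actual card format: the operation names (`SET`, `ADD`, `SUB`, `MOVE`, `MOVE_IF_POSITIVE`) and the `store` dictionary are hypothetical. Each card is read in turn, and a move card shifts the reading position backward or forward by a number of cards.

```python
def run(cards, max_steps=10_000):
    """Read cards one at a time; return the store (named numbers) at the end."""
    store = {}
    position = 0  # index of the card currently under the reader
    for _ in range(max_steps):
        if position < 0:
            raise IndexError("moved back past the first card")
        if position >= len(cards):
            return store  # ran off the end of the stack: program finished
        op, *args = cards[position]
        if op == "SET":
            name, value = args
            store[name] = value
        elif op == "ADD":
            target, source = args
            store[target] += store[source]
        elif op == "SUB":
            target, source = args
            store[target] -= store[source]
        elif op == "MOVE":
            # Unconditional move: a plain loop back (or a skip forward).
            (offset,) = args
            position += offset
            continue
        elif op == "MOVE_IF_POSITIVE":
            # Conditional branch: move only while the named number is above zero.
            name, offset = args
            if store[name] > 0:
                position += offset
                continue
        else:
            raise ValueError(f"unknown card: {op}")
        position += 1
    raise RuntimeError("step limit reached; the program may loop forever")


# Hypothetical program: add up 5 + 4 + 3 + 2 + 1 with a software loop.
program = [
    ("SET", "total", 0),
    ("SET", "n", 5),
    ("SET", "one", 1),
    ("ADD", "total", "n"),          # card 3: start of the loop body
    ("SUB", "n", "one"),            # card 4
    ("MOVE_IF_POSITIVE", "n", -2),  # card 5: go back two cards while n > 0
]
result = run(program)
print(result["total"])  # 15
```

The last card does both jobs described above: it repeats the loop body by moving the stack back, and it breaks out of the loop by simply not moving once the condition fails.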

In just four years, by 1838, Babbage had invented all there was to invent.

At this point his Analytical Engine,

could be programmed by the use of punched cards. It had a separate memory and central processor. It was capable of looping... as well as conditional branching... It also featured... graph plotters, printers...card readers and punches. In short, Babbage had designed what we would now call a general-purpose digital computing engine.

Even though his computer was purely theoretical, Babbage still wrote some software for it, first to introduce the concept of programming, but also to demonstrate his machine's unlimited power and flexibility.[1]

No finite machine can include infinity... but it is possible to construct finite machinery, and to use it through unlimited time. It is this substitution of the infinity of time for the infinity of space which I have made use of, to limit the size of the engine and yet to retain its unlimited power.

In the period 1836 to 1840 Babbage devised about 50 user level programs for the Analytical Engine. Many of these were relatively simple... others allow[ed for] solution of simultaneous equations.

Ada Lovelace, first programmer

With no interest at home, Babbage accepted an invitation in 1840 from the king of Sardinia, Charles Albert, to come to his capital city Turin, so his subjects could learn about his revolutionary machine. It would be the only public lecture that he would ever give about his cogwheel computer.[1]

Shortly after his presentation, General Menabrea wrote an article in French about what he had seen.

Two years later, Ada Lovelace, a friend of Babbage's and the only person that really understood the Analytical Engine, published her translation of this article, that she called Sketch of The Analytical Engine.

What makes this translation famous is that Lady Lovelace had added a lot of notes to better explain what Menabrea was talking about. In fact, her notes were longer than the original article.

Menabrea had shown in his article a few lists of instructions, copied verbatim from Babbage's exposé, to demonstrate the simplicity provided by the use of software (kind of the first published software?).

But one of Lovelace's notes described an algorithm, written by Babbage, to compute and print successive numbers of Bernoulli which would turn out to be the only program that used a true algorithm (a software loop) published in the 19th century.
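
For readers who want to see what such a computation looks like today, here is a short Python sketch. It uses a standard modern recurrence and is not a transcription of the program in Lovelace's notes; the function name is our own. The point is the loop: each new number is built from all the previous ones.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(count):
    """Return the first `count` Bernoulli numbers B_0, B_1, ... as exact fractions.

    Uses the recurrence B_m = -1/(m+1) * sum over k < m of C(m+1, k) * B_k,
    with B_0 = 1 (the convention in which B_1 = -1/2).
    """
    numbers = [Fraction(1)]
    for m in range(1, count):
        total = sum(comb(m + 1, k) * numbers[k] for k in range(m))
        numbers.append(-total / (m + 1))
    return numbers[:count]


for index, value in enumerate(bernoulli_numbers(7)):
    print(f"B_{index} = {value}")
```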

One must realize that, with the inability to build Babbage's machine, it took more than a hundred years for the next piece of software to be written and then published and it was in the 1940s.

In his memoirs, Babbage tells us about the program

relating to the numbers of Bernoulli, which I [Babbage] had offered to do to save Lady Lovelace the trouble. This she sent back to me for an amendment, having detected a grave mistake which I had made in the process.

Lady Lovelace corrected the master!

This makes her the first independent programmer, the first programmer, really.

Babbage dedicated his autobiography to King Victor Emmanuel II, Charles Albert's son. He writes:

In 1840, the King, Charles Albert, invited the learned of Italy to assemble in his capital. At the request of her most gifted Analyst, I brought with me the drawings and explanations of the Analytical Engine. These were thoroughly examined and their truth acknowledged by Italy's choicest sons.

To the King, your father, I am indebted for the first public and official acknowledgment of this invention.

I am happy in thus expressing my deep sense of that obligation to his son, the Sovereign of united Italy, the country of Archimedes and of Galileo.

As we will see later down this page, the computers that surround us today are all electronic copycats of Babbage's design.[1]

In a Babbage machine the architecture is composed of:

input, output, processing unit and memory. The only difference found over the years is on the input side because of the way the computer is seen and used.

Babbage wanted to use his Analytical Engine like a library routine to which you pass many different parameters, so it had a card reader for the program, one for the numbers, one for the variables... If you wanted a different set of values for a new mathematical table, you would just change the 'number' stack of cards, reset the other stacks and restart the machine...

Aiken also wanted to print books of mathematical tables, so he decided to use two readers, one for program, one for data, to make various tables for the same equation (Harvard architecture).

Eckert & Mauchly wanted simplicity and wanted everything read in memory before a run for optimum speed, which meant that everything had to be together (Stored-program architecture), but to a programmer, all of these Babbage machines have the same architecture (Babbage architecture).

Babbage complete defines any machine that is animated by the sequential reading of instructions (software) which make it perform mathematical operations on the numbers that surround it (provided by memories and peripherals).

The machine must also implement two fundamental concepts: Software loops, where the same group of instructions can be executed over and over again, and Branching, where the machine is told to go to a different piece of code, either to break out of a loop or to just execute a different part of the program.

Babbage complete is another way to define whether the machine you're looking at is a computer or not. If it is a computer, it is Babbage complete, it's that simple.

So, early TVs (before they were equipped with microprocessors), these extremely sophisticated electronic machines, were NOT Babbage complete because they didn't do their jobs by reading instructions that made them process the data transmitted over the airwaves. An early generation TV wouldn't know what to do with a program.

A Babbage Machine is any machine that is Babbage Complete, a computer, really.

Note:

You will find the erroneous expressions Turing complete and Turing machine sprinkled everywhere in the computer literature where they are used to specify whether a machine is a true computer or not, as defined by Turing.[2,3] The only problem is that Babbage had absolutely, completely, totally defined and documented the computer, its architecture and the basic building blocks of software that completed it more than seventy years before Alan Turing was even born. Alan Turing has nothing to do with the definition, the invention or the creation of the computer and its industry. Zero, zilch, nada! Babbage Complete or Babbage Machine are the expressions that should be used to define whether a machine is a computer or not.

Türing machine, which is used by some uninformed story tellers to define whether a machine is a computer or not, comes from an article that Alan Turing wrote in 1936 (in English; the umlaut attached to his name in the first few decades was unearned) about an impossible machine of infinite size, that behaved kinda like a computer... Impossible, infinite, kinda... and yet these uninformed story tellers tell us that Turing would have invented the computer then, a hundred years after Babbage, one year before IBM started to do research on Babbage's work (1937), that would end up in their building his computer (built 1939-1943, delivered 1944).

The only original work from Turing in the computer field is the Turing test, which tries to determine whether you're talking to a computer or a human being, and which these days is big in science fiction writings.

GLOSSARY

Türing machine. In 1936 Dr. Turing wrote a paper on the design and limitations of computing machines. For this reason they are sometimes known by his name. The umlaut is an unearned and undesirable addition, due, presumably, to an impression that anything so incomprehensible must be Teutonic.

Anyone who taps on a keyboard, stares at a Smartphone or plays a video game, is using an incarnation of Babbage's Analytical Engine

Babbage used the design flow of a factory for the architecture of his machine, dividing it into:

| Analytical Engine | Factory |
|---|---|
| Input | Receiving |
| Output | Shipping |
| Processing | Manufacturing |
| Memory | Storage |

And since he used Jacquard cards from the textile industry as mechanical memories to store his programs, he used terms from that industry to describe the architecture of his machine, with mill for its processing unit and store for its memory.

Babbage's inspiration came to him after having spent ten years (1822-1832) visiting factories all over Europe to familiarize himself with the state of the art of manufacturing, hoping to gain knowledge that would help him optimize the design of his Difference Engine.

As a newly minted expert on the subject, he wrote the book On the Economy of Machinery and Manufactures in 1832.

Two years later, with a fresh definition of the design flow of a factory still in mind, he came up with his calculating factory design: the Analytical Engine.

This factory architecture, as exemplified on the first computer ever (IBM's analytical engine, the ASCC), was copied as the base for all the early modern electronic computers (Moore School type). Parallel architecture machines later took hold, and yet they still use his architecture for the atoms of their design: single-core as well as multi-core microprocessors, where each core is a Babbage machine.

Babbage was inspired by the work of Gaspard de Prony on mathematical tables in the design of both of his automatic mechanical calculators.

First it was de Prony's use of the method of differences to compute mathematical tables, which Babbage automated with his Difference Engine, and second it was his division of labor, which he used to design his cogwheel computer.

De Prony, a nobleman who had escaped the guillotine during the French Revolution because of the recognized value of his scientific knowledge,

...had been commissioned during the Republic to prepare a set of logarithmic and trigonometric tables to celebrate the new metric system. These tables were to leave nothing to be desired in precision and were to form the most monumental work of calculation ever carried out or even conceived... With customary methods of calculation... there simply were not enough computers—that is to say people carrying out calculations—to complete the work in a lifetime.

De Prony was stuck in a dead end; fortunately he soon discovered the division of labor championed by Adam Smith in his book on the Wealth of Nations and decided to apply it to achieve the impossible.[1]

Mr. de Prony took on the task... to execute... the broadest and most imposing monument of computation ever done or even conceived... Quickly Mr. de Prony realized that, even if he partnered with three or four clever cooperators, most of his expected life span wouldn't be enough to achieve his goal. He was preoccupied with this negative thought when, as he was standing in front of a bookstore street display, he noticed the 1776 British book of Smith, from London; he opened it at random and found in the first chapter, which deals with division of labor, an example using the fabrication of pins. After browsing through a few pages, he was inspired and realized that he could speed up his task by manufacturing his logarithm tables the way that pins were made.

Babbage kept this part in French:

M. de Prony s'était engagé... à composer... le monument de calcul le plus vaste et le plus imposant qui eût jamais été exécuté, ou même conçu... Il fut aisé à M. de Prony de s'assurer que même en s'associant trois ou quatre habiles cooperateurs la plus grande durée presumable de sa vie, ne lui sufirai pas pour remplir ses engagements. Il était occupé de cette fâcheuse pensée lorsque, se trouvant devant la boutique d'un marchand de livres, il apperçut la belle édition Anglaise de Smith, donnée a Londres en 1776; il ouvrit le livre au hazard, et tomba sur le premier chapitre, qui traite de la division du travail, et où la fabrication des épingles est citée pour exemple. A peine avait-il parcouru les premières pages, que, par une espèce d'inspiration, il conçut l'expédient de mettre ses logarithmes en manufacture comme les épingles.

And so, de Prony divided the work at hand among three distinct groups of people.

Babbage decided to use the same division of labor but to replace the third group by his mechanical computer.

| Gaspard de Prony | Babbage |
|---|---|
| A FEW Brilliant Mathematicians: decide on plan & formulas | A FEW Brilliant Mathematicians: decide on plan & formulas |
| A FEW Mathematicians: come up with key computations | A FEW Programmers: come up with algorithms and code |
| A LOT of Basic Human Computers: only perform additions and subtractions | A LOT OF TIME, Mechanical Computer: executes simple math under control of a program |

To understand the scope of the human and financial effort required to build either of Babbage's machines, we can use the success story of the arithmometer.

The release of the arithmometer marks the starting point of the mechanical calculator industry because it was the first four-function machine to achieve the minimum level of strength and reliability required for daily use in an office environment.

For this reason it was the first to be manufactured in quantity with the first 100, the first 1,000 and, with its licensees, the first 10,000 four-function mechanical calculating machines ever produced.

It was patented in 1820 by Thomas de Colmar, a self-made millionaire, at about the same time that Babbage went into the business himself, but it was only commercialized from 1851.

That is thirty-one years in total; yes, it took thirty-one years for Thomas to ready his arithmometer for manufacturing.

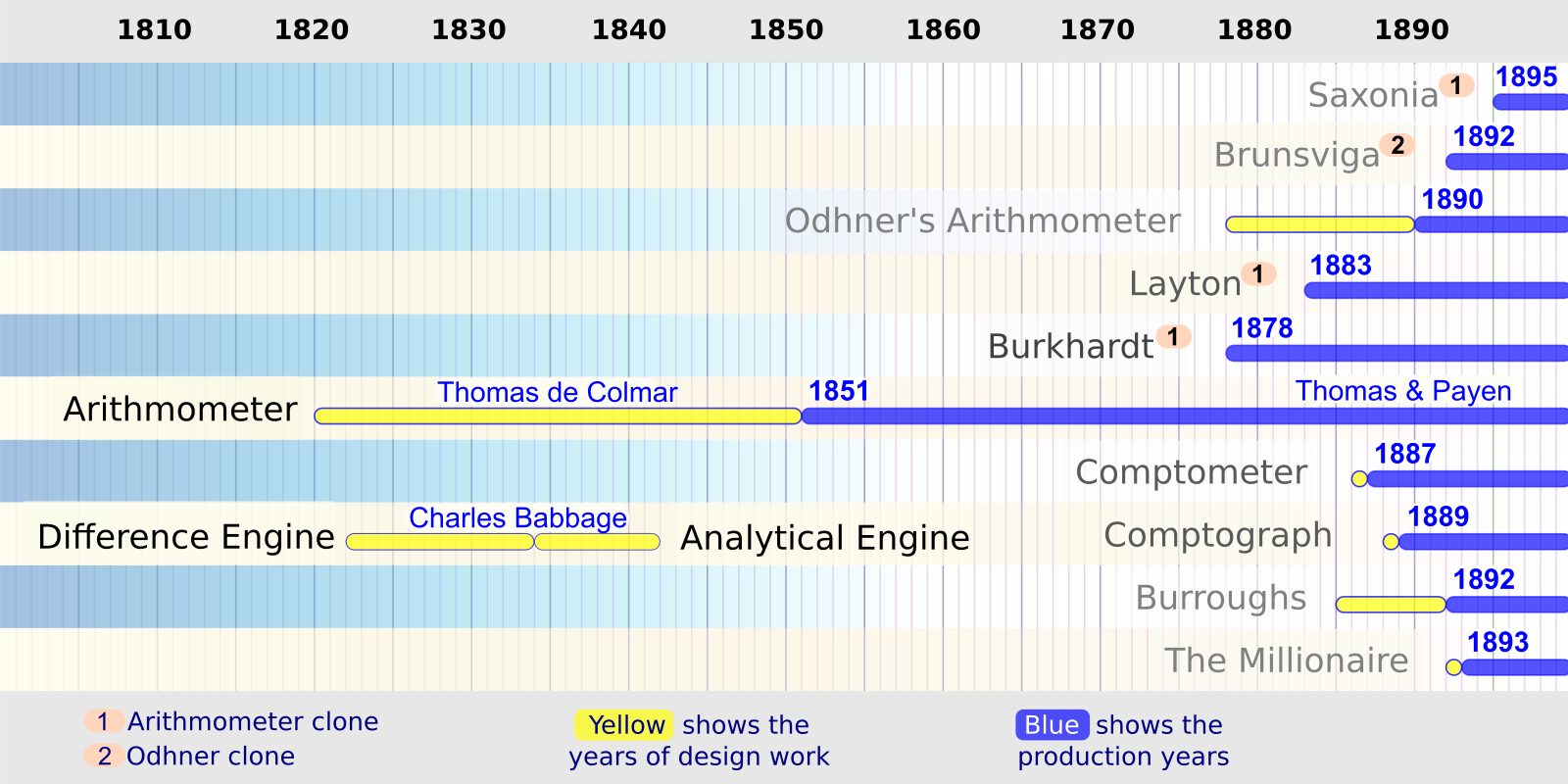

First Mechanical Calculators in production

It was not an easy task. First he had to simplify and adapt its design for the very primitive manufacturing techniques of the time, but he also had to make it sturdy enough to sustain the beating it could take from constant office use.

Money, and making enough of it to support its development was also crucial. In fact this entire exercise cost Thomas twice as much as what he paid in 1850 for Chateau Maisons-Laffitte,[1,2] a castle once owned by the future King of France Charles X.

Thomas spent thirty years of his life and more than 300,000 francs to develop his admirable calculating machine.

Original text in French:

L'inventeur a dépensé trente ans de sa vie et plus de trois cent mille francs pour arriver à construire son admirable machine

...he bought the beautiful castle Maisons-Laffitte for just 150,000 francs cash.

Original text in French:

...il acheta pour la modique somme de 150 000 francs comptant, le jolie château de Maisons-Laffitte

And yet the arithmometer was many orders of magnitude simpler than either of Babbage's Engines.

The arithmometer, the 1st commercialized mechanical calculator - © Sarah Robinson 2012

Chateau Maisons-Laffitte was half the cost (check the man in the circle for scale)

Thomas de Colmar's determination and massive investment launched the mechanical calculator industry and paid off tremendously.

Not only was his arithmometer the only type of four-function mechanical calculator available for one third (1850-1887) of the one hundred and twenty years that this industry lasted (1850-1970), but with the help of international manufacturing licensees (mostly British and German),[1] the arithmometer dominated the industry for half of this time (1850-1910).

...Thomas' machines were also exported, with 60% going abroad; it is said that 1500 machines were manufactured before 1878. Some manufacturing licenses were granted to foreign manufacturing companies, Tate based in London, UK (the basic model had 16 numbers), Burkhard[t] based in Glashütte, Saxony.

Original text in French:

...les machines de Thomas étaient même exportées, à 60%, à l'étranger ; 1500 exemplaires furent, paraît-il, commercialisés avant 1878. Des licenses de production furent accordées à des sociétés étrangères, Tate à Londres (le modèle de base était à 16 chiffres), Burkhard[t] à Glasshütte en Saxe.


Babbage and Britain spent the equivalent of 40 of those and then Britain gave up

By contrast, the British government and Charles Babbage each spent the equivalent of 20 early Robert Stephenson locomotive engines to finance the development of his first project, the Difference Engine.

Considering the task at hand, it was a non-existent or at least flimsy investment, especially when you realize that they should have invested on the scale of the Palace of Versailles.

And it was only for Babbage's first engine because the British government refused to contribute any money to the development of his cogwheel computer, the Analytical Engine.

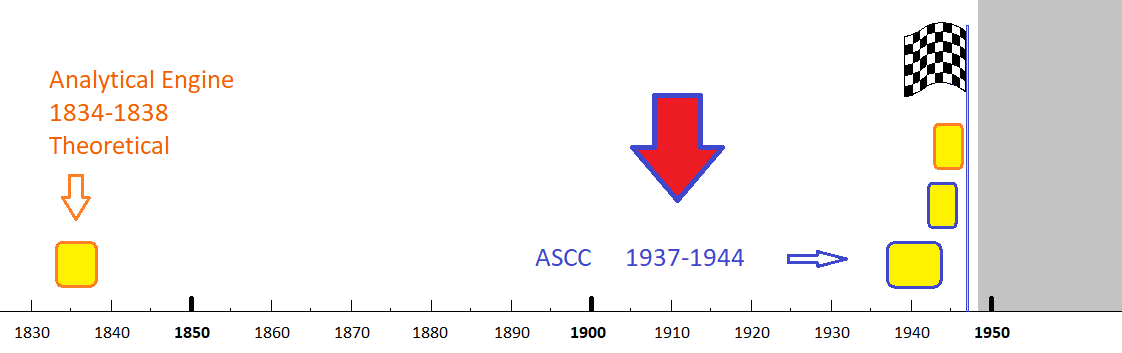

IBM was first to succeed at building Babbage's Analytical Engine (finished in 1943, delivered 1944). Its design was open‑sourced shortly after the end of the Second World War in a 560-page manual.

In his review of this document for the magazine Nature titled Babbage's dream comes true, L. J. Comrie, a pioneer in mechanical computation, lashed out at the British government of Babbage's time:

The black mark earned by the government of the day more than a hundred years ago for its failure to see Charles Babbage's Difference Engine brought to a successful conclusion has still to be wiped out. It is not too much to say that it cost Britain the leading place in the art of mechanical computing.

IBM built Babbage's machine but it happened a hundred years later[1] thanks to:

The machine now described, "The Automatic Sequence Controlled Calculator", is a realization of Babbage's project in principle, although its physical form has the benefit of twentieth century engineering and mass-production methods.

It cost between $300,000 and $400,000 to build the ASCC. A sizable part of that came from the US Navy...

Original text in French:

...la construction de l'ASCC avait coûté entre 300 000 et 400 000 dollars. Une bonne partie fut couverte par les fonds avancés par la Marine...

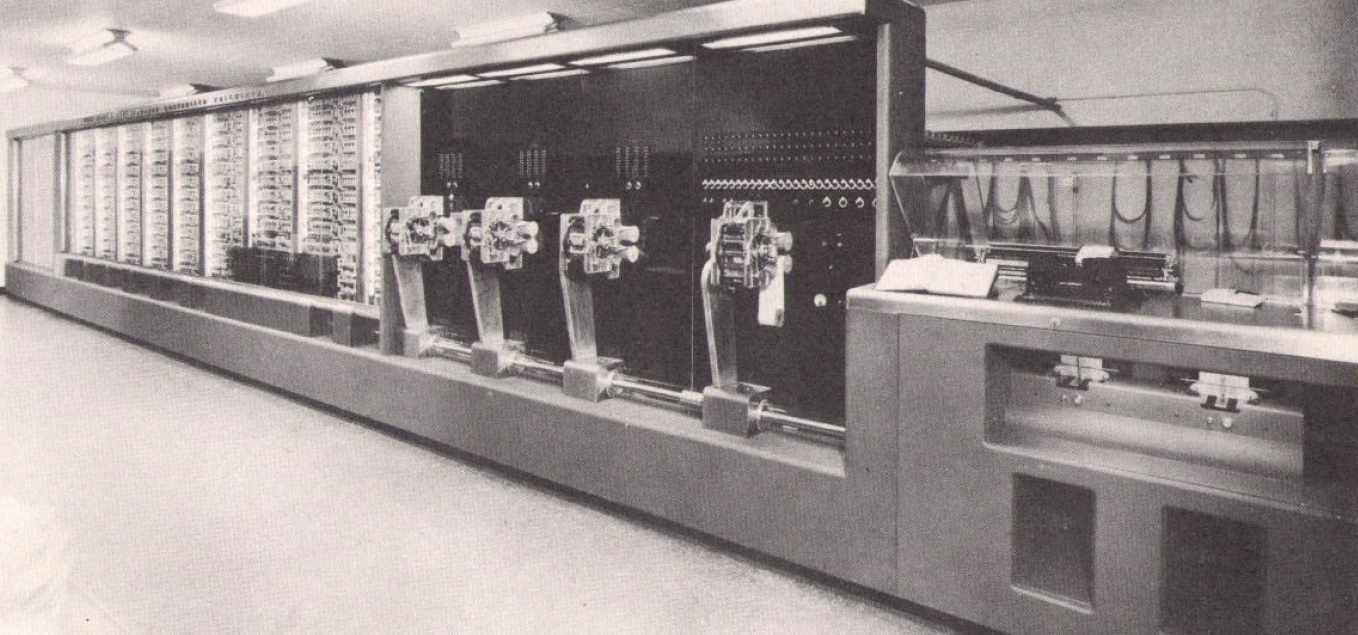

The ASCC, IBM's Analytical Engine. If finished, Babbage's machine would also have had a similar size

...in the subject of automatic digital computing machines... perhaps the greatest individual contributing factor was that a team... actually brought Babbage's dream of an automatic sequenced digital calculator into realization. It is to the great credit of Dr. Howard Aiken of Harvard University and the International Business Machines Corporation to have constructed the first completely automatic general-purpose digital computing machine (1944).

IBM worked on the project from 1937 to 1943 and delivered it to Harvard in early 1944. Its engineers replaced Babbage's stacks of cogwheels with more reliable electromechanical parts. Initially called the ASCC, the machine was renamed Harvard Mark I by Howard Aiken a few years later.

The ASCC was an engineering wonder of its time. It was:

51 feet long and 8 feet high... [There were] 500 miles of wire, 3,000,000 wire connections...

Five hundred miles is the distance from San Francisco to San Diego. This wire length was made of 1.5 million individual pieces that were connected in 3 million places... the complexity is mind boggling!

The Trinity nuclear explosion refined by calculations on the ASCC

As soon as it became operational at Harvard, around July 1944, the US Navy drafted the ASCC into the war effort where it was used 24/7, in secret, to support the design of atomic weapons, starting with the design of implosion for the first detonation of a nuclear weapon ("the gadget", plutonium, 22 kilotons of TNT, Trinity test, July 1945). Some other critical calculations included the determination of critical mass...

John von Neumann was the Manhattan Project team leader responsible for the first programs that ran on the ASCC. This project introduced him to the purity of the architecture of a Babbage machine.

The ASCC turned out to be the only programmable digital computer operational and in use during WWII. It was used at Harvard 24/7 for fifteen years until it was disassembled in 1959.

20 programmable calculators under the control of a master program brought computer science into the electronic age.

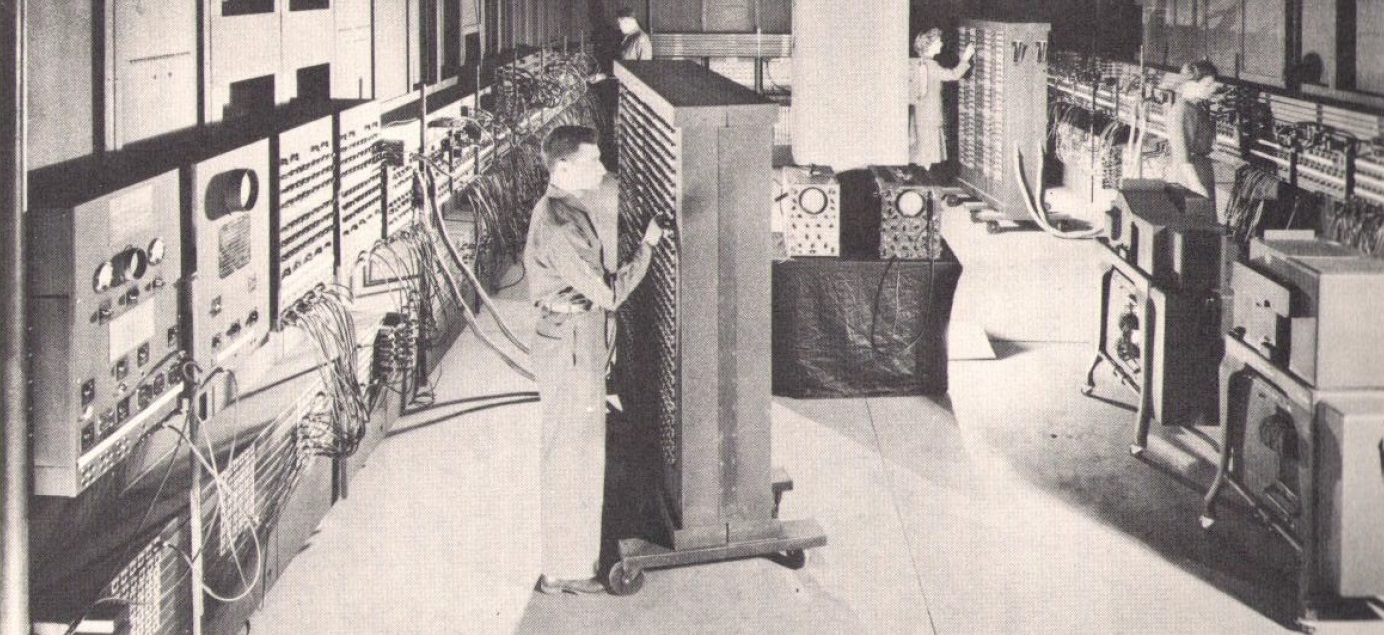

This second computer ever was a complicated kluge[1] compared with the architectural purity of Babbage's Analytical Engine, but it was all-electronic, and it was faster than anybody could have imagined.

This term is used extensively in the electronic industry: a kluge is a quick-and-dirty solution that is clumsy, inelegant, inefficient...but that can be made in the shortest possible time, usually as a proof of concept.

The first Electronic computer. Twenty individual programmable electronic calculators under the control of one master program

Paid for by the US Army, it was developed from 1943 at the Moore School of Electrical Engineering of the University of Pennsylvania (UPenn).

J. Presper Eckert and John Mauchly designed it by combining the electronic calculator work of John Atanasoff of Iowa State University with the programming by wire found on IBM tabulators since 1902.[2]

They called their machine ENIAC.

ENIAC wasn't the electronic progeny of... Babbage's Analytical Engine... At its core, it was a bunch of pascalines wired together. But ENIAC had intelligence, it was programmable. And for the first time ever, intelligence was all-electronic.

Pressed for time, and hoping to use ENIAC before the end of World War II, the army refused any enhancements to the plans that they had agreed upon.

Instead, they paid for another project, purely theoretical, which would be a collection of all the new and improved ideas that had arisen since they started the project.

The first H-Bomb refined by calculations on the ENIAC

This definition of a second electronic computer, which they called EDVAC, ran concurrently with the building of ENIAC from early 1944. It became the theoretical blueprint of the modern computer, which is commonly referred to as the stored-program computer.

An important crossover from the IBM/Babbage ASCC came from John Von Neumann, who knew both the ASCC and ENIAC very well. Eckert and Mauchly ended up using the purity of Babbage's ASCC architecture for the theoretical EDVAC, their stored-program computer.

As soon as the ENIAC became operational at the end of 1945, early 1946, the US Army turned it over to the Manhattan Project to do top secret work on the H-bomb.[1]

In the early spring of 1946, Dr. Bradbury of the Los Alamos Labs wrote:

The calculations which have been performed on the ENIAC as well as those now being performed are of great value to us... The complexity of these problems is so great that it would have been almost impossible to arrive at any solution without the aid of the ENIAC. We are extremely fortunate in having had the use of the ENIAC for these exacting calculations... It is clear that physics as well as other sciences will profit greatly from the development of such machines as the ENIAC.

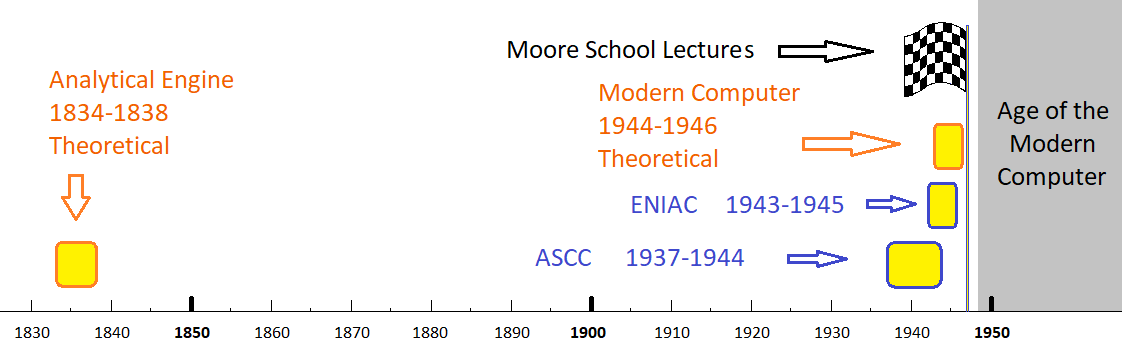

Everything was open-sourced at the end of the Second World War.

Eckert and Mauchly finished ENIAC and theoretical EDVAC, their two UPenn projects, a few months after the Allied Powers' victory of September 1945. They were both declassified in early 1946 at about the same time as the IBM ASCC computer.

The US Armed Forces had basically paid for everything up to this point[1] and so they decided to share (to open-source) everything they knew about programmable digital computers with the world[2] by organizing the Moore School Lectures in the summer of 1946, inviting electronics specialists from the US and the UK to UPenn for a set of 48 day-long classes.

I always think of the Moore School course as an outstanding example of the sharing of technological information, perhaps the most generous that has ever taken place

IBM paid for about 25% of the cost of building the ASCC and the US Navy paid for the rest. The US Army paid for the two UPenn projects (ENIAC, theoretical EDVAC) in full. The US Air Force didn't exist yet.

There were two students from England, one from Cambridge University and one from Manchester University. Both these universities would have stored-program computers running within a few years (Manchester Baby & Mark I and Cambridge EDSAC)

Of course, these lectures introduced the IBM ASCC and the ENIAC, which were the only two working programmable digital computers in existence at the time[3] but the purpose of these classes was to describe EDVAC, their theoretical stored-program computer, which became the starting point—the de facto standard—for the modern computer.

AT&T's Bell Labs got their first PROGRAMMABLE computer, the model V (B. Randell, The origins of Digital computers: selected papers, page 239) working at Aberdeen in July 1946 (50 Years of Army Computing: From ENIAC to MSRC, page 23) just as the lectures started.

Note: The ABC and the Colossus, two sophisticated electronic machines of the 1940s, are often mistaken for the first electronic PROGRAMMABLE digital computers. And yet, neither of them is a computer, since they are not dedicated to reading lists of instructions that make them work on numbers. They can do one thing and one thing only. They are not under the control of a program. The Colossus, designed to decrypt German messages, was like a TV: a complex piece of electronics which turns serial streams of unintelligible numbers into a human-readable format with the help of knobs and dials.

Next time someone tells you that they were computers, ask them to show you a program, any program, that would have run on these machines. Demand to see their respective instruction sets. Ask them to write and run the Bernoulli numbers program that Babbage & Lady Lovelace devised a century before. Don't feel bad if you thought that they were; you're not alone. A judge declared the ABC to be the first electronic programmable digital computer in 1973, invalidating Eckert & Mauchly's ENIAC patents in the process! The ABC and the Colossus are not Babbage Complete.

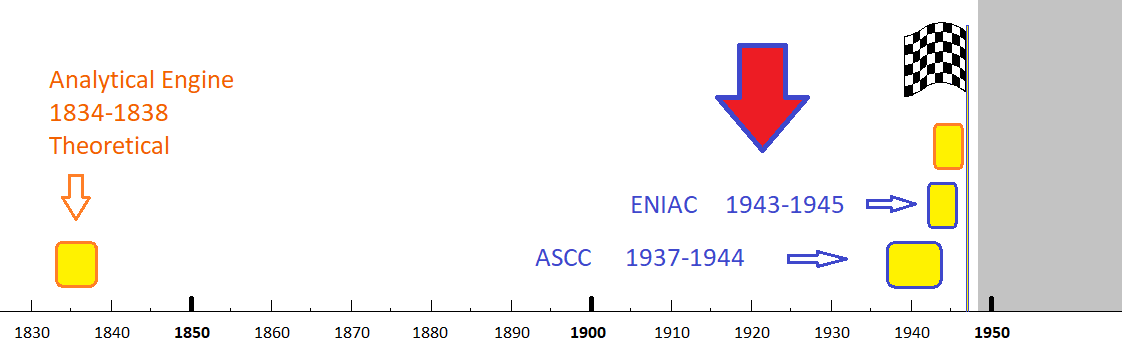

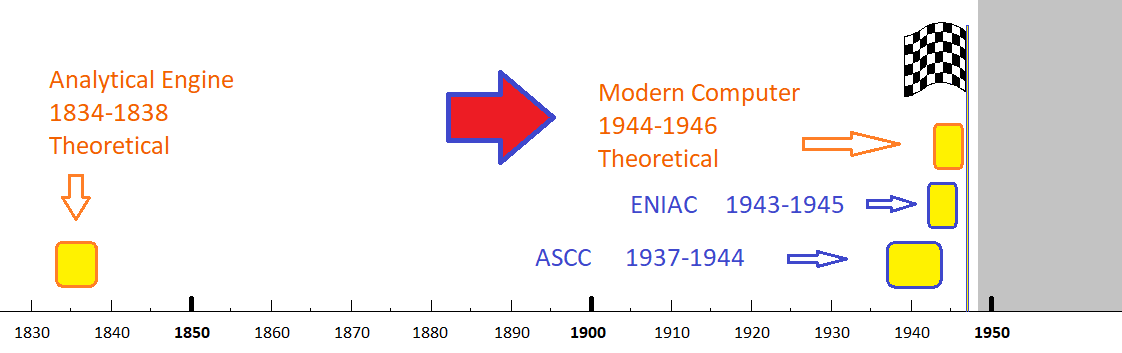

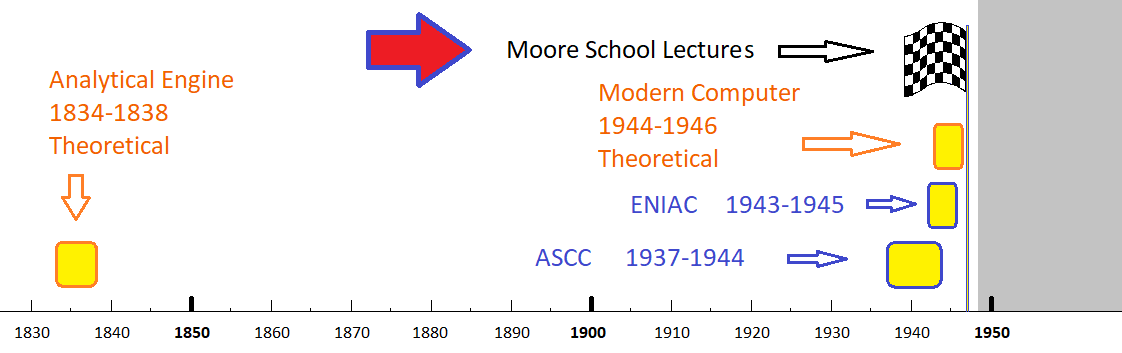

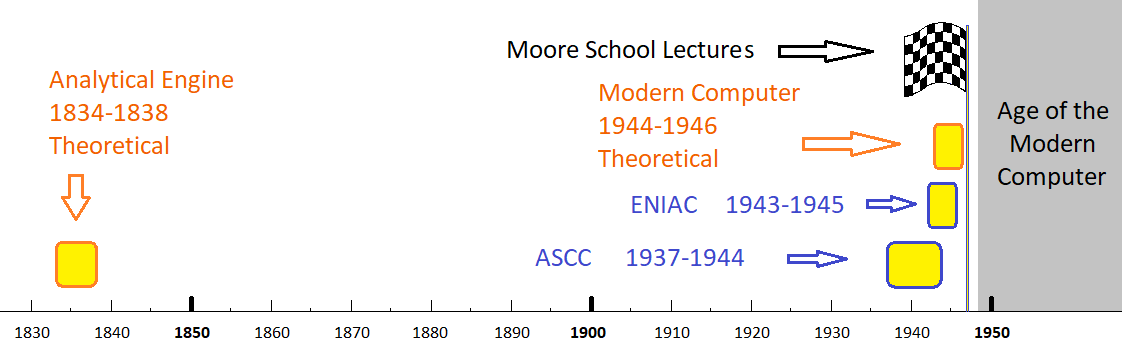

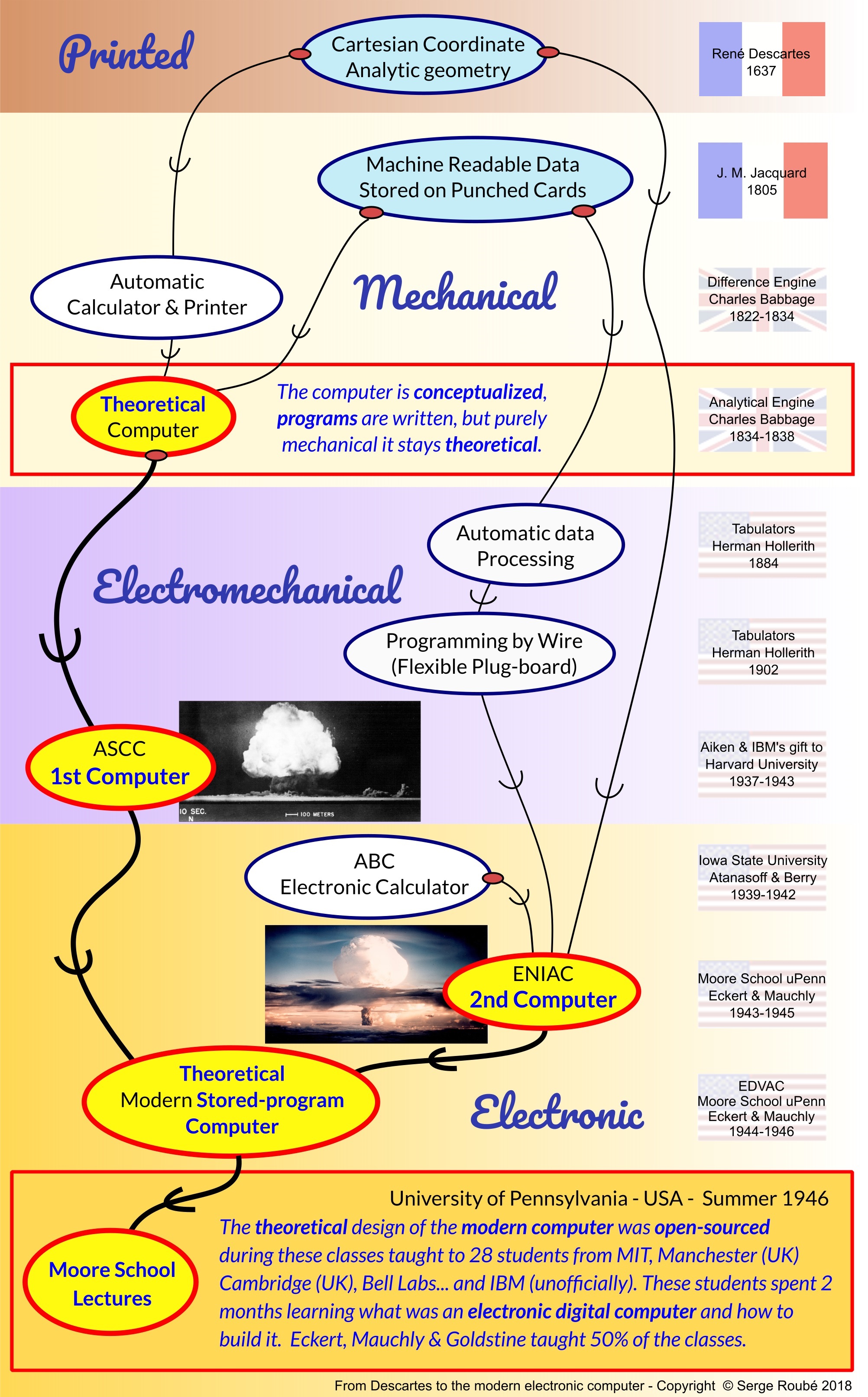

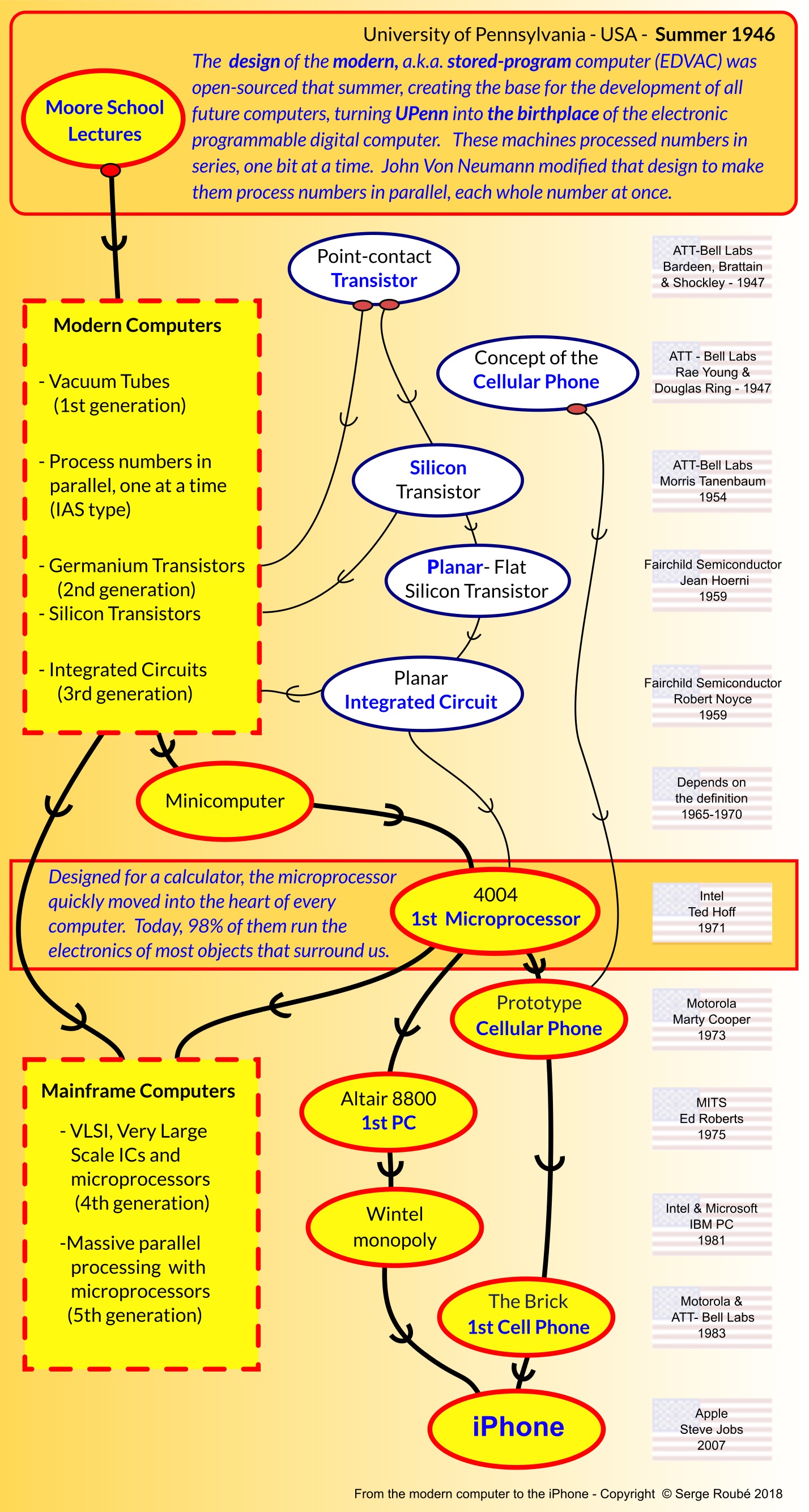

Here is a quick visual recapitulation of what happened up to this point:

It is hard to believe that the modern computer was gifted to the world just 72 years ago; more than half of the American citizens born the same year, in 1946, are still alive today, and yet, in that short amount of time, these stored-program computers have revolutionized everything they touched.

Their evolution happened at a stunning speed, going from slow, room-size, multi-ton machines, to computer systems weighing less than two ounces, like the ones fitted on Google glasses.

If cars developed at the pace of computers, a 2017 Ford Mustang EcoBoost would:

- have 660 million horsepower

- get from 0 to 60 mph in 3.4 milliseconds

- get about 3.7 million miles per gallon

- and cost $4,500

What is even more striking is the profound effect that they are having on all aspects of our society, including the way we work, interact, wage war and govern.

A SAGE console. First Screen/pointer user interface (notice the light gun on the table) - © info

(This part is a bit technical, skip to the next one if it becomes boring)

The stored-program computer described during the Moore School Lectures had almost the same architecture as the Babbage/IBM ASCC, but it had a slight disadvantage: it processed words serially, one bit at a time, instead of processing them in parallel, one word at a time (with a 20-bit word, that wastes an awful amount of time).
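To make the serial versus parallel difference concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the function names `serial_add` and `parallel_add` and the way steps are counted are hypothetical, and they do not model the timing of any real machine. The serial version handles one bit of the word per step, carrying as it goes, while the parallel version handles the whole word in one step.

```python
def serial_add(a, b, width=20):
    """Add two words one bit at a time (hypothetical bit-serial model).

    Returns (sum truncated to `width` bits, number of steps taken).
    """
    carry = 0
    result = 0
    steps = 0
    for i in range(width):
        bit_a = (a >> i) & 1          # current bit of the first word
        bit_b = (b >> i) & 1          # current bit of the second word
        total = bit_a + bit_b + carry
        result |= (total & 1) << i    # store the sum bit
        carry = total >> 1            # carry into the next bit
        steps += 1                    # one step per bit
    return result, steps


def parallel_add(a, b, width=20):
    """Add two words all at once (hypothetical word-parallel model).

    Returns (sum truncated to `width` bits, number of steps taken).
    """
    mask = (1 << width) - 1
    return (a + b) & mask, 1          # the whole word in a single step


serial_result, serial_steps = serial_add(123456, 654321)
parallel_result, parallel_steps = parallel_add(123456, 654321)
print(serial_result, serial_steps)
print(parallel_result, parallel_steps)
```

Both functions return the same sum, but in this model the serial adder needs one step per bit of the word while the parallel adder needs a single step per word.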

John Von Neumann, whose Los Alamos team had used both the ASCC at Harvard (fission) and the ENIAC at UPenn (fusion) for the Manhattan Project, and who had participated in the second half of the design of the modern computer, decided to start a new branch of stored-program computers that would process words in parallel, like what he had seen done with the ASCC.

He developed it at the Institute for Advanced Study (IAS) in New Jersey. It would eventually win over and become the standard for stored-program computers.

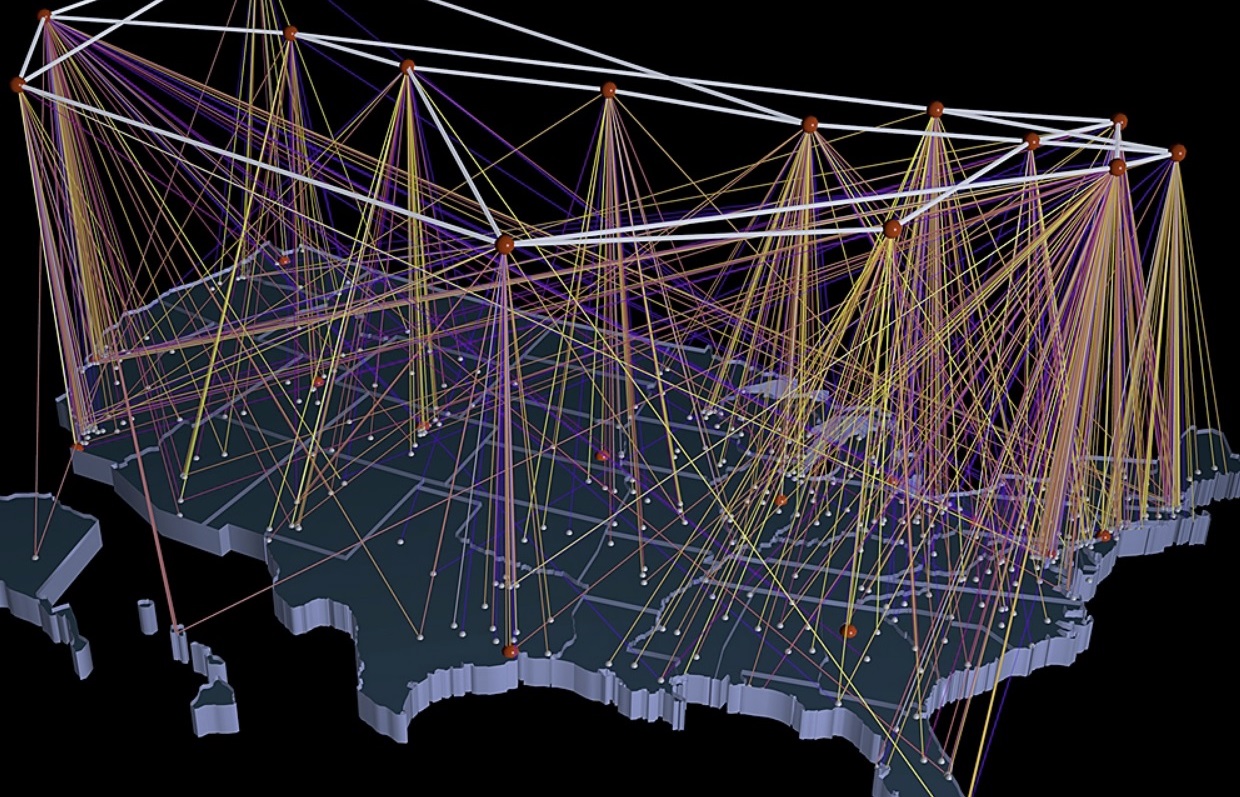

The National Science Foundation "family tree" of computers (picture taken from this website).

This is important because one of the attendees of the Moore School Lectures was Jay Forrester from MIT, who would go on to develop Whirlwind I, the first true modern computer. Its design was the basis for many firsts: the first real-time computer (instant processing of radar data to defend against enemy aircraft),[1] the first time-sharing computer (so you can have more than one user at a time) and the first to use magnetic core memory, which was to become the standard memory for the next 20 years.

But Whirlwind I was also one of the very first IAS-type computers, which means that it had a Von Neumann architecture; that is, it processed words in parallel, as championed by Von Neumann, which means that it had a full Babbage architecture.

A young US Air Force (they had become a separate branch of the US Armed Forces in 1947) would inherit the Whirlwind I design from the US Navy and have IBM evolve it to build SAGE, our air defense system with 54 (27 pairs) of those.[2]

IBM (with the help of AT&T) connected them with the first wide area network.

They also turned it into the first system in which operators interacted with the machine through display consoles coupled with pointing devices (light guns).

SAGE would provide 80% of IBM's computer revenues from 1952 to 1955.[3]

On April 20, 1951... for the first time, radar data on an approaching 'enemy' aircraft had been sent over telephone lines and entered into an electronic digital computer, which had almost instantaneously calculated and posted instructions directing the pilot of a 'defending' aircraft to his target

In total, 23 direction centers, three combat centers, and one programming center were built. Because each center was duplexed, there were 54 CPUs in all

The British were off to a strong start with three separate Moore School type computers working by 1950.

Soon after, they developed LEO, the first computer system dedicated to running a business, which was based on EDSAC, Cambridge University's first Moore School computer. LEO was named after the J. Lyons and Co. company, which had participated in EDSAC's financing.

By the mid-1950s, teams all over the world were designing their own Modern computers.

By the late 1950s, though, whatever lead the Europeans had was lost to American companies

After the 1950s, most development outside the United States would either disappear or fill ever smaller niches.

The US discovers the computer on TV on election night 1952

Let's go back to Eckert and Mauchly, in less than ten years,

At this point Eckert and Mauchly were leading the computer industry, the sky was the limit.

There was just one problem: they sold their first UNIVAC to the US Census Bureau, which had been Herman Hollerith's first customer back in 1890 (the merger of Hollerith's company and two others created IBM in 1911; it was therefore one of IBM's deepest roots).[1] Herman Hollerith had single-handedly created the field of automatic data processing in 1884, with electromechanical machines that read stacks of data stored on punched cards.

The Hollerith Company invented automatic data processing on punched cards which were first used nationally for the 1890 census. They were later part of the three company merger that created C-T-R (1911) which was later renamed IBM (1924)

IBM feared that its core business, centered around these machines, could be completely annihilated by these stored-program computers.

Cornered, threatened and with new leadership (Tom Watson Jr. had just replaced Tom Watson Sr.) IBM responded with an all-out assault[2] which resulted in their dominating the entire computer industry before the 1950s were over.

Tom Watson Jr. recalled:

In the early 50s... Red LaMotte... said... "the guys at Remington Rand have one of those UNIVAC machines at the Census Bureau now, and soon they'll have another... They've shoved a couple of our tabulators off to the side to make room". I thought, "My God, here we are trying to build Defense Calculators, while UNIVAC is smart enough to start taking all the civilian business away!" I was terrified... We decided to turn this into a major push to counter UNIVAC

So we went from:

This next step is when the unexpected happened.

but the real heroes here, the people that changed the world forever, are the eight geniuses who founded Fairchild Semiconductor in Palo Alto, California, in 1957.

They were part of a crack team hired and moved near Stanford University by William Shockley, when he started his own silicon transistor design firm in 1956.

Shockley, whose AT&T Bell Labs research team had invented the transistor (1947, made with germanium), had again hired the crème de la crème to join him in a new venture to design and manufacture silicon transistors (also invented at Bell Labs, in 1954, after Shockley was gone).

But this time, he had added the need for a winning attitude to the academic excellence requested of his candidates.

Shockley said he would put together "the most creative team in the world for developing and producing transistors and other semiconductor devices"... His ability to choose the best scientists in the field was unmatched.

The only thing is that he was such a bad manager that eight of them quit as one[1] by 1957. So they had spent about eighteen months learning from the most talented semiconductor designer of the time and now, the best in the world chose the very best amongst themselves to leave and to start their own company.

Kleiner was one of the group of eight

When at first they decided to leave, the group of eight began casting about for another employer to hire them all. They had enjoyed working together and wanted to continue. But through Kleiner's father they got in touch with the East Coast investment banking firm of Hayden Stone, which suggested an alternative. "you really don't want to find a company to work for," its representatives told the group. "you want to set up your own company, and we will find you support."

Very quickly our eight wonders found financing from Sherman Fairchild, a major IBM shareholder, whose father had been the first chairman of IBM from its creation in 1911 to 1924, when it was still doing business as C-T-R.[2]

"The chairman of the board of the new company [CTR] was George Fairchild" & "Appendix E: George Fairchild: chairman 1911 July to 1924 December"

It is therefore not a surprise that IBM bought the first 100 silicon transistors they ever made, giving them instant credibility but, in return, it started IBM's slow decline from its position of untouchable computer industry top dog.

This is because Silicon Valley slowly, progressively, relentlessly lowered and eventually destroyed the previously impassable barrier to entry into the computer business.

Thanks to a rapid-fire stream of innovations and inventions, Fairchild Semiconductor launched Silicon Valley, the place that would eventually put a computer in everybody's hands or pockets.

In the late 1970s, the American Electronics Association published a genealogy of Silicon Valley. The table showed that virtually every company in the valley could show a line leading directly to someone who worked at and eventually left Fairchild Semiconductor.

The fundamental design of all that mattered then and now was invented and manufactured by the group of eight that started Fairchild Semiconductor in Palo Alto, CA.

First and foremost, Jean Hoerni invented the planar process in 1959, taking a very hard to manufacture, somewhat 3-dimensional silicon transistor, and replacing it with a 2-dimensional one that can be "developed" like a picture (the process is called photolithography).

When challenged by their patent attorney to come up with an application for the planar process, Bob Noyce invented the planar Integrated Circuit (IC) the same year (1959). A planar IC is composed of a bunch of planar transistors, their supporting electronics and their connections, "developed" on one flat piece of silicon. Because of that, the planar IC is also referred to as a monolithic-IC.

Bob Noyce is the sole inventor named on the planar IC patent and we'll see that he deserves a lot of credit, but the first IC was a group effort from all of the original founders. Also, their top notch reputation put them on a fast track to success since it allowed Fairchild Semiconductor...

to attract so many of the nation's top minds and talents in such a short time.

Jay Last led the team that spent the next year and a half making Noyce's IC a reality. They succeeded at building the first ever "manufacturable planar IC" by the summer of 1960.

The results speak for themselves; sixty years later, in 2018, 1 trillion semiconductor devices, based on that technology, were sold worldwide for a grand total of 469 billion dollars.

Isaac Asimov, the great science and science-fiction writer, is said to have described the invention of the IC as "the most important moment since man emerged as a life form." Strong words, to be sure, but not easily proven wrong given all that has resulted from that innovation. Or rather two innovations, because the IC's invention is inextricably bound up with the creation of the planar process for semiconductor fabrication.

|

Note: Contrary to popular belief, and despite receiving a Nobel price that he should have never been awarded,[1] Jack Kilby is not the co-inventor of the Integrated Circuit. In his 523 pages book dedicated to proving this fact, Saxena wrote: The facts also corroborate this statement: The only kind of ICs sold from the very beginning have been the monolithic-ICs, commonly termed as just the ICs, made from Si.

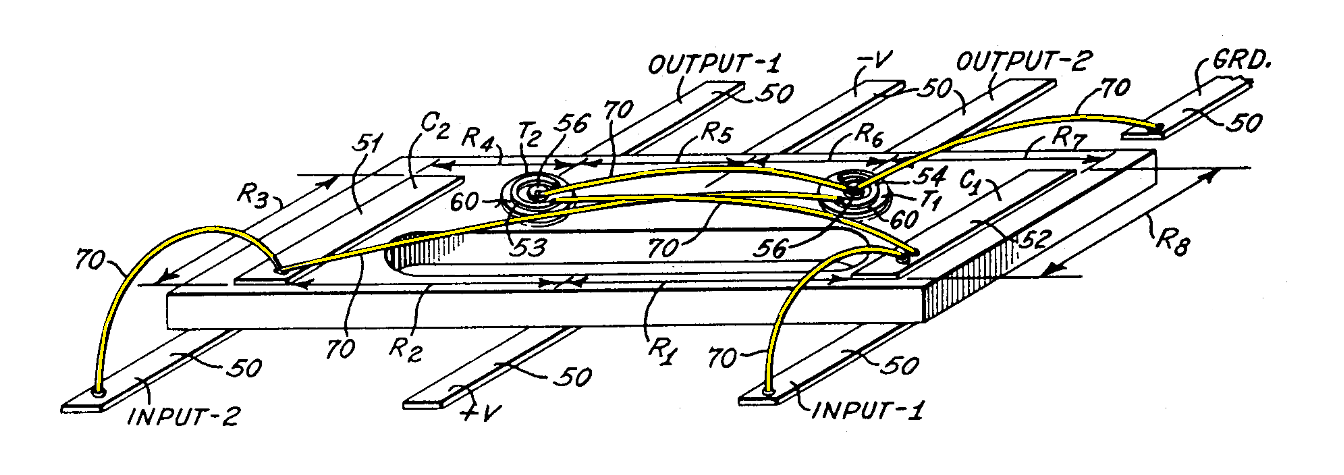

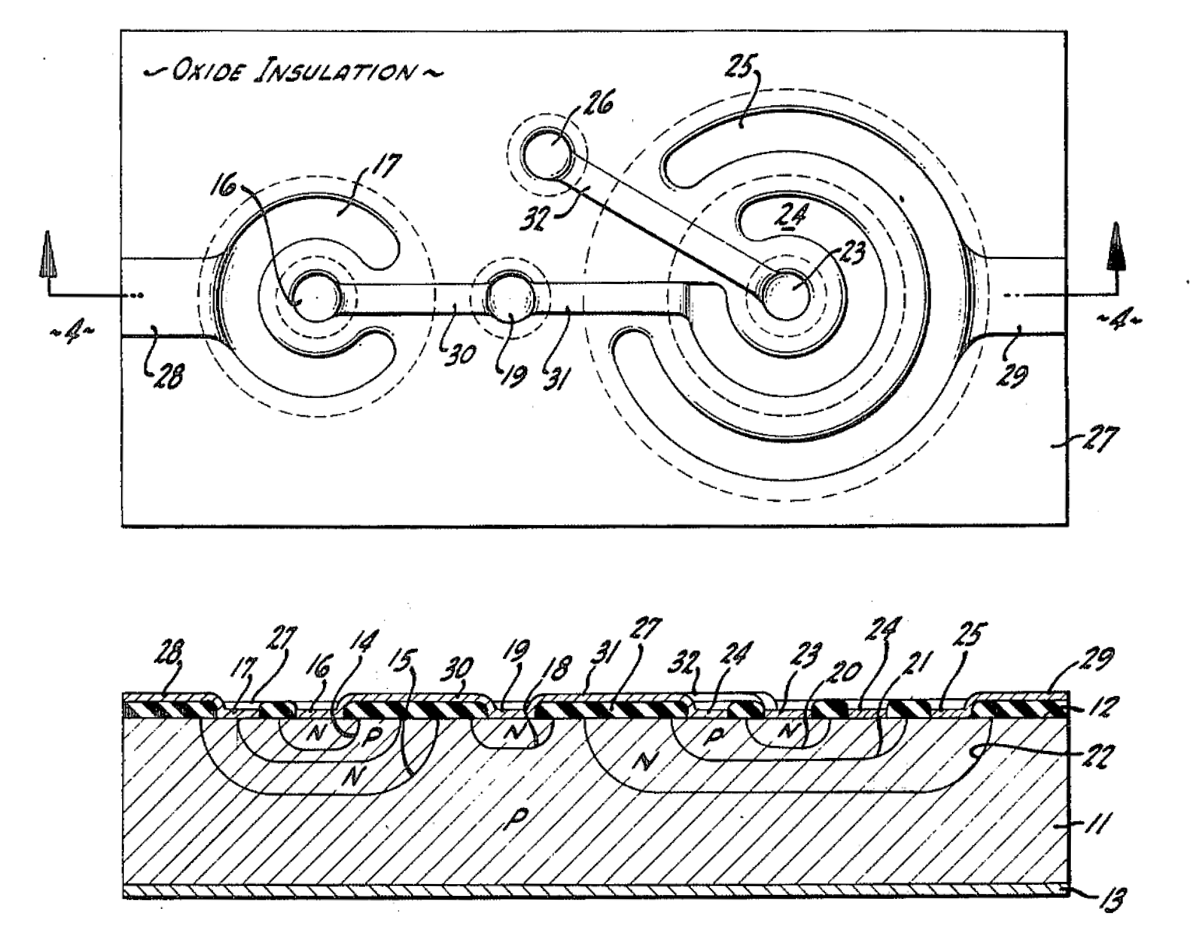

From Kilby's 1959 patent: Often called the flying wire picture, this kind of IC was impossible to mass-produce and never went beyond the prototype phase

From Noyce's 1959 patent: The planar silicon IC, with absolutely no wire. It started the "Xeroxed" IC computer revolution, from one to many. Just make one mask and "develop" as many as you want. Kilby's patent described a Germanium based design where the various components were connected by wires. The complexity, limited upside potential and cost to manufacture killed its chances of ever being a useful invention (especially compared to Hoerni/Noyce's method of "Xeroxing" flat Silicon ICs). This is why Silicon Valley is in California and not in Texas. |

The man responsible for having Silicon Valley growing out of Palo Alto in Northern California was Frederick Terman who became dean of the School of Engineering of Stanford University after WWII.

As such, he provided the next leg of our story in two ways. On one hand, he created Stanford's industrial park, a 700-acre park designed to welcome high-tech companies, where students and faculty could interact with the real world and vice versa; HP, GE, Rockwell and many more top-notch companies moved there from 1951 on. On the other hand, he lobbied intensely to bring projects to Palo Alto.

His greatest success came in 1956, when he convinced William Shockley to move near Stanford University to start his silicon transistor company, even though Shockley was being financed by Arnold Beckman, a Caltech professor whose company, Beckman Instruments, was based more than 300 miles away, in Pasadena, near Caltech.

So, Terman lobbied Shockley to move near him, Shockley focused on technical excellence and winning attitude to hire the greatest team in the world to develop silicon transistors, eight of them started Fairchild Semiconductor in Palo Alto in 1957, where they invented and developed the planar IC in 1959, the rest is the story of Silicon Valley.

Fairchild Semiconductor and its co-founders are the absolute root of Silicon Valley, but they couldn't have done it alone.

The State of California and the foresight of its shapers provided the perfect environment for this startup nursery.

First, California provided for a labor fluidity that is still fairly unique in the world:

California law forbade any labor contract that limited what an employee could do after quitting... in the rest of the US, trade secrets were guarded jealously and employees were forbidden to join competitors.

And of course, the primary beneficiary of this law, in this brand new semiconductor field, were the traitorous eight, as Shockley used to call them. On the East coast none of them would have been able to work in the industry for years, but California was different, they could work in the same field a second after quitting.

Patents were still used and enforced, but this labor law allowed for a rapid exchange of knowledge and expertise which fueled the growth of Silicon Valley. The magnificent eight were first, but they had shown the way for anyone else to follow. In fact, in January 1961, half of the founders of Fairchild left to start their own company (Amelco). All the spin-offs created by former employees of Fairchild became known as fairchildren.

Also, Fairchild Semiconductor had spawned such a creative environment that, all of a sudden, it became important to be part of this unique ecosystem, just to be kept in the loop. And so, all of the East Coast electronics mega-corporations started to open offices there, bringing with them and sharing their own expertise as well.

On top of that, California invested in a top-notch system of UC public colleges that provided a local, highly educated workforce.

The aggressive funding of research of the highest quality by private universities like Stanford University and Caltech brought prestige and knowledge to the state.

Caltech... drew—raided—faculty from places like Harvard and MIT by promising researchers their own labs, a free hand to run the research they wanted, and all the money they needed, an irresistible combination... consequently... [they] attracted not only some of the brightest researchers, but the most iconoclastic, giving the school an edge of excitement, an atmosphere tinged with adrenaline.

And of course the life blood of startups: Venture capital firms

The first Venture capital firms appeared in the US in 1946, both on the East Coast and in San Francisco.[1] They would provide the fuel for the explosion of activity in Silicon Valley.

These venture capital firms grew tremendously from the fact that,

spurred by the cold war...the US enacted... the Small Business Investment Company Act of 1958. The government pledged to invest three dollars for every dollar that a financial institution would invest in a startup (up to a limit). In the next ten years, this program would provide the vast majority of all venture funding in the US. The Bay area was a major beneficiary.

On top of that, during the 1970s, with a top income tax bracket of at least 70%, the government decided to facilitate private investments in the capital market.

First, Congress created two retirement vehicles, the IRA (1974) and the 401(k) (1978), that allowed taxpayers to invest in the stock market directly with tax-deferred money (up to a limit). They also reduced the capital gains tax rate from 49.5% to 28% (1978), and finally they allowed pension funds to engage in high-risk investment (1979).[2]

All of this channeled a tremendous amount of money into Silicon Valley startups.

As a result, the greatest creation of wealth in the history of the planet took place there.[3]

This is in the title of the book

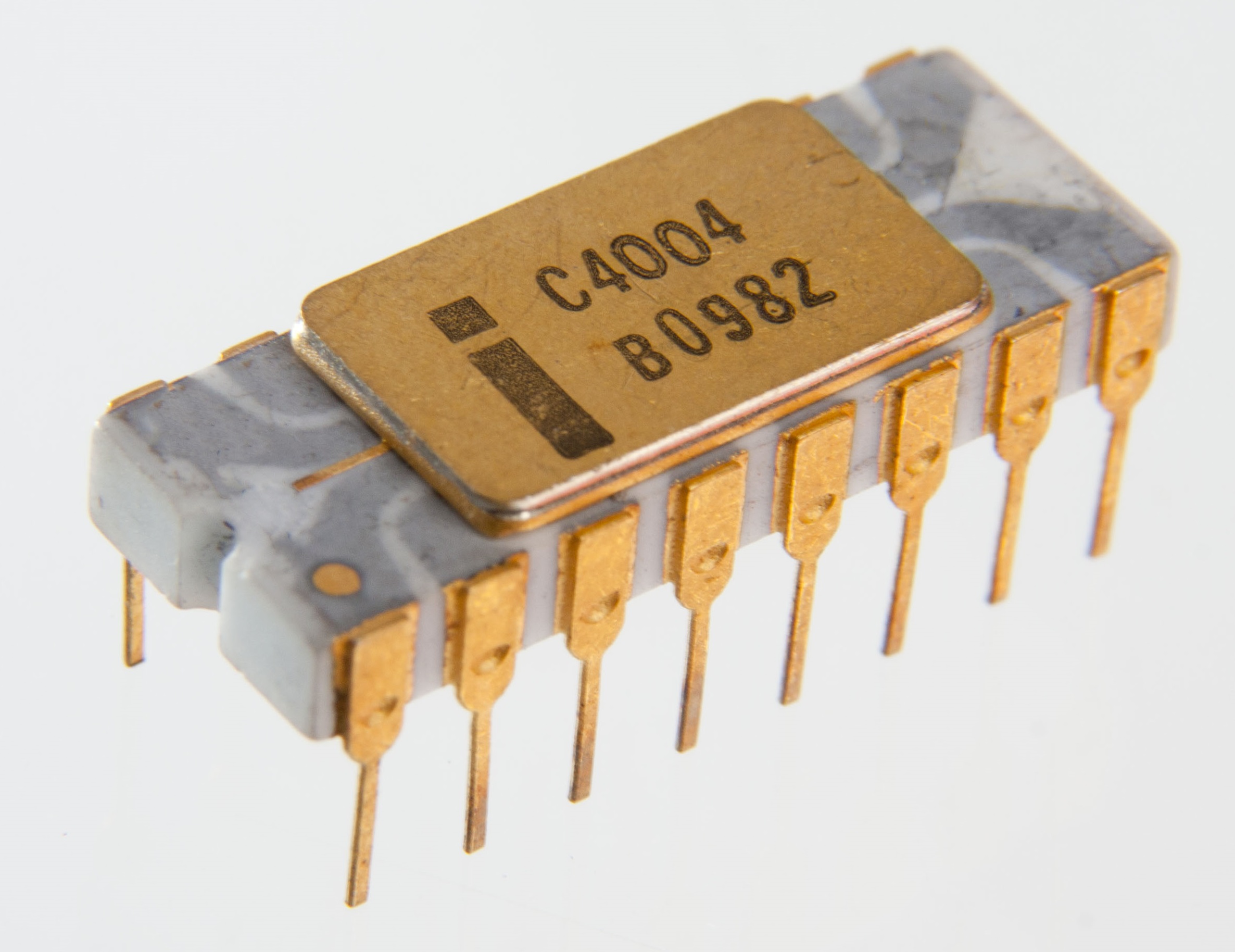

Intel 1971: a computer system in 4 chips - © info

Bob Noyce and Gordon Moore, two of the eight geniuses that started Fairchild Semiconductor, left in 1968 to start Intel Corporation where they integrated all of the fundamental building blocks of a computer into microchips.

Their first hire was Andy Grove, who had worked with them at Fairchild. Together these three became known as the Intel Trinity

It was the most perfect match of complementary personalities ever put together.[1] And thanks to their reputation, they were able to do what Shockley had done eleven years earlier: hire only the crème de la crème to help them design and build semiconductor devices. The result was spectacular.

Robert Noyce, the most respected high-tech figure of his generation, brought credibility (and money) to the company's founding; Gordon Moore made Intel the world's technological leader; and Andy Grove relentlessly drove the company to ever-higher levels of success

Thanks to Fairchild Semiconductor and Intel Corporation (and so many more), Silicon Valley shrank the design, the cost and the complexity of the computer down to something the size of a fingernail.

Minicomputers, PCs, cellular phones, tablets, Google glasses... followed!

With the advent of the microprocessor, the computer field split in half and evolved into two separate directions:

Computer systems use a lot of embedded systems as peripherals, like hard drives, internet routers, keyboards, webcams, printers, scanners...

Each one of these peripherals is an embedded system powered by at least one microprocessor.

Back in 2003, a typical car used even more embedded systems:

The current 7-Series BMW and S-class Mercedes boast about 100 processors apiece. A relatively low-profile Volvo still has 50 to 60 baby processors on board. Even a boring low-cost econobox has a few dozen different microprocessors in it. Your transportation appliance probably has more chips than your Internet appliance.

The Internet is at the root of our 21st century computer-centric information revolution.

As we have seen earlier, the first computers to be linked into a wide area network were part of SAGE, a unique, multi-billion dollar set of 27 military computer centers, scattered all over the USA, and operated from the mid-1950s by NORAD as a part of the air defense system, but they all depended on AT&T's telephone wires that, if cut, would isolate one or many computers.

Internet traffic in the US in 1991 - © info

ARPANET, which was later generalized into the Internet, was a network designed to prevent the accidental isolation of any computer no matter the circumstances.

It was the first decentralized network based on packet switching and demand access.

When you download a picture, a song, a video... its content is first cut into little packets which are sent individually or in small groups to your local router. From there, they hop independently from router to router, always asking around for the fastest possible route, until they reach their destination where they are reassembled.
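The idea of cutting content into packets and reassembling it at the destination can be sketched in a few lines of Python. This is a toy illustration, not how any real network stack is written: the function names `split_into_packets` and `reassemble`, the 8-byte packet size and the use of a shuffle to stand in for packets taking different routes are all assumptions made for the example.

```python
import random


def split_into_packets(data, size=8):
    """Cut the content into numbered packets of at most `size` bytes."""
    return [
        (seq, data[start:start + size])
        for seq, start in enumerate(range(0, len(data), size))
    ]


def reassemble(packets):
    """Put the packets back in order using their sequence numbers."""
    ordered = sorted(packets, key=lambda packet: packet[0])
    return b"".join(chunk for _, chunk in ordered)


message = b"A picture, a song, a video..."
packets = split_into_packets(message)

# Stand-in for packets hopping independently from router to router:
# they may arrive in any order.
random.shuffle(packets)

received = reassemble(packets)
print(received == message)
```

Because every packet carries its own sequence number, the destination can rebuild the original content no matter which route each packet took or in which order the packets arrived.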

Leonard Kleinrock brought the first ARPANET node to life at UCLA in September of 1969.

The Stanford Research Institute (SRI), UC Santa Barbara and the University of Utah joined in over the following three months. And so, by the end of 1969, the backbone of ARPANET consisted of four operational routers.

In the mid-1970s Bob Kahn and Vint Cerf created a set of universal network interchange tools that launched the internet (TCP/IP). At first internet sites were linked into ARPANET, but because of the flexibility of the internet, ARPANET was quickly replaced, and by 1990 it was shut down.

Ted Nelson had invented Hypertext (the concept and the name) in the early 1960s. Hypertext is the name of those links that you click on to go for more information at a different place in your current page or on a different web page altogether.

He tells us:

I first published the term "hypertext" in 1965... By hypertext I mean non-sequential writing. Ordinary writing is sequential for two reasons. First, it grew out of speech and speech-making, which have to be sequential, and second, because books are not convenient to read except in a sequence. But the structures of ideas are not sequential...

More than twenty years later, in 1989, Tim Berners-Lee of CERN added a way to create hypertext links to any willing computer present on the internet. By doing so, he created the World Wide Web, which can be described as an information space existing right on top of the internet. You are currently using a web browser on your computer that received this computer history web page from my web server.

There are currently more than 3 billion people using the World Wide Web.

In 2015, 5 quintillion bytes of data (that's 5 × 10^18, or 5,000,000,000,000,000,000 bytes of data) traveled through the internet.[1] To get an idea of scale, this is roughly the distance from the Earth to Alpha Centauri, our closest star system, expressed in centimeters!
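The comparison with Alpha Centauri can be checked with a quick back-of-the-envelope calculation. The sketch below rests on two assumptions that are not in the text: a distance of about 4.37 light-years to Alpha Centauri (a commonly quoted figure) and about 9.4607 × 10^15 meters per light-year. The variable names are made up for the example.

```python
# Assumption: commonly quoted distance to Alpha Centauri, in light-years.
ALPHA_CENTAURI_LIGHT_YEARS = 4.37
# Assumption: approximate length of one light-year, in meters.
METERS_PER_LIGHT_YEAR = 9.4607e15
CENTIMETERS_PER_METER = 100

# Figure quoted in the text: bytes that traveled through the internet in 2015.
BYTES_IN_2015 = 5e18

distance_cm = (
    ALPHA_CENTAURI_LIGHT_YEARS * METERS_PER_LIGHT_YEAR * CENTIMETERS_PER_METER
)
ratio = BYTES_IN_2015 / distance_cm

print(f"Distance to Alpha Centauri: {distance_cm:.2e} cm")
print(f"Bytes per centimeter of that distance: {ratio:.2f}")
```

Under these assumptions the distance comes out at a little over 4 × 10^18 centimeters, the same order of magnitude as the 5 × 10^18 bytes, or a bit more than one byte for every centimeter of the trip.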

This is also the amount of data that was created that year on all of the world computers every two days.[1]

Intel's 1st microprocessor

© Steve Behr 2012

The Personal Computer came from the increase in power and complexity of monolithic ICs.

In its first three years of existence, Intel created all of the specific ICs needed by a hobbyist to assemble a very simple computer system in a garage (1968-1971).

Building a PC became as simple as playing with a Lego set, take a microprocessor chip, a few memory chips (permanent and dynamic), a few I/O chips, wire these guys together and you're in business.

Microprocessors evolved rapidly, becoming more and more performant, functioning at higher speed and capable of handling more data with each new generation.

This allowed Ed Roberts of MITS to introduce the first true PC, the Altair 8800, in January 1975. A few other PCs had been introduced earlier, with or without a microprocessor inside, but this one had a design that inflamed the passions of computer hobbyists.

Ed Roberts would create the entire PC industry before the end of the year.

Ed Roberts: ...we created an industry and I think that goes completely unnoticed. I mean there was nothing, every aspect the industry, whether you're talking about software, hardware, application stuff, dealership... you name it... was done at MITS.

The main reason for claiming the title of first PC is that it was the first one based on Intel's 5th microprocessor, which was finally powerful enough to do some real work, but also that its user interface, composed of LEDs and switches, was a copy of that of the Data General Nova 2 minicomputer and, just like the Nova 2, it had a bunch of empty slots inside, ready to accept many different kinds of expansion cards.

In a later interview Ed Roberts recalled:

We had a Nova 2 by Data General in the office... The front panel on an Altair essentially models every switch that was on the Nova 2. We had that machine to look at... These guys had already figured it out

One of the very first Altair 8800s built at MITS, with its original 256 bytes of memory

© Sarah Robinson 2012

So the Altair looked like a minicomputer, and functioned like a minicomputer, but it sold for $439 as a kit or $621 if you'd rather buy it fully assembled. There was no comparison with the $6,000 that you had to pay for a bare-bones Nova 2.

The Altair also had an open-source expansion bus (later renamed S-100), so a myriad of third-party developers jumped on the bandwagon to develop all kinds of S-100 expansion cards, like memory cards, tape drive controllers... This entire industry was born that year.

The first Altair clone, the IMSAI 8080, was released nine months after the introduction of the Altair, in October 1975.

It wasn't a wildfire, it was a thermonuclear explosion!

Ed Roberts mentioned in an interview that, just one year after the introduction of the Altair 8800,

around January 1976,

IBM came up with some figures that showed MITS and our Altair to be increasing the supply for computers in the world by 1% each month

As we just saw, the Altair 8800 was the first microprocessor PC with Intel's fifth microprocessor inside. It looked like a Nova 2 minicomputer but was 10% of its price. Everybody wanted one and could afford one.

Which brings us to the first two PC giants of the late 20th century.

Bill Gates and Paul Allen (co-founders of Microsoft), Steve Jobs and Steve Wozniak (co-founders of Apple) all got started in the PC industry because of the release of the Altair 8800.

March 1975 is a key month for the genesis of these two mega corporations:

Steve Wozniak recalls:

I can tell you almost to the day when the computer revolution as I see it started, the revolution that today has changed the lives of everyone.

It happened at the very first meeting of a strange, geeky group of people called the Homebrew Computer Club

The first PC user convention, the World Altair Computer Convention (WACC) happened in Albuquerque, New Mexico, on March 26-28, 1976.

Bill Gates opened the convention criticizing the piracy of their Basic software by computer hobbyists, while a few months later the Apple I was released with free Apple Basic!

Microsoft charged for software, Apple gave it away for free, two opposite philosophies that have continued to this day. Anyway, the computer industry developed so quickly thereafter that there would never be a second WACC.

Many competitors rushed in to beat the Altair. Apple entered the fray in April 1976 with its release of the Apple I, which was quickly followed by the Apple II, released in June 1977. Apple eventually emerged as the leader of the PC industry in the late 1970s, only to be replaced by IBM a few years after IBM released its own PC (1981). IBM in turn got sidestepped by clone makers a few years after Compaq released the first IBM PC clone (1982).

The closed architecture of Apple's designs doomed them and brought about a Wintel (Windows-Intel) monopoly of the IBM PC and its clones that lasted for almost 30 years. It would end after the release of smaller devices like tablets and smartphones.

The first computer (ASCC/Harvard Mark I) was developed by IBM, a private corporation. IBM was followed by the University of Pennsylvania, which developed the next two (ENIAC & EDVAC).

The US military had financed these three projects[1] while fighting WWII.

Except for the ASCC (75%), all of the first computers were paid for entirely by the US military (1944 ASCC: fission bomb, 1945/6 ENIAC: fusion bomb, theoretical EDVAC, AT&T's Bell Labs Model V, Binac...)

At the end of the war, the military leveled the playing field by open-sourcing these three computer designs, especially EDVAC (the theoretical electronic stored-program computer), during the Moore School Lectures, attended by 28 students from academia, the military, the government and American corporations.

It took Silicon Valley (private companies) with its refinement of silicon transistor devices from 1959 (planar process, silicon ICs and microprocessors), for the computer to shrink in size and to move to ever smaller work places and budgets.

But the driving force that changed our society forever, the internet, which currently links billions of our Babbage machines together, was a one-two punch from the military and politicians:

In the fall of 1990, there were just 313,000 computers on the Internet; by 1996, there were close to 10 million. The networking idea became politicized during the 1992 Clinton-Gore election campaign, where the rhetoric of the information highway captured the public imagination. On taking office in 1993, the new administration set in place a range of government initiatives for a National Information Infrastructure aimed at ensuring that all American citizens ultimately gain access to the new networks.

The US military didn't invent the computer or the internet, but it paid for all of their early development. Similarly, Al Gore didn't invent the internet, but he was behind the political push that made it what it is today.

Currently more than 3 billion people have access to the internet.

The cell phone infrastructure was conceptualized and later deployed by AT&T.

AT&T operated as a vertical monopoly, which was allowed by the government as long as they stayed in the telephone business. This lasted from 1913 (Kingsbury Commitment) to 1984 when it was effectively broken up into seven pieces as a result of an antitrust lawsuit.

Dr. Martin Cooper with his 1973 cell phone prototype

This tolerated monopoly meant that AT&T couldn't compete in any field that was not related to the telephone, so even though they invented the germanium transistor (1947) and the silicon transistor (1954), they gave away all research papers about them and they provided licenses for their use for almost nothing.

Fairchild Semiconductor profited greatly from that.

AT&T's Bell Labs had research centers in New Jersey where they invented and implemented a great number of communication tools. Fiber optics, lasers, communication satellites and the cellular phone concept all came from these NJ research labs.

The concept of the cell phone was first proposed there in 1947. It took the invention of the microprocessor, the computer on a chip needed to manage the electronics of a portable phone, for Marty Cooper to develop the first cell phone prototype at Motorola in 1973 and for it to be integrated into a network of AT&T cell phone towers within the next decade.

In 1983, Motorola launched the first cell phone, nicknamed the brick for its size and shape.

A cell phone tower manages communications within its cell, which could be defined as the space within which it provides the highest possible communication quality. The cells of neighboring towers overlap.

As a traveler moves from one tower cell to the next, his phone always keeps in contact with the tower that provides the highest quality signal.
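The handoff described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the `Tower` class, the straight-road layout and the `signal_quality` formula (quality simply drops with distance) are hypothetical and are not taken from any real network, which would also weigh interference, load and other factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tower:
    name: str
    position_km: float  # hypothetical location along a straight road


def signal_quality(tower: Tower, phone_km: float) -> float:
    """Toy model (an assumption): quality falls off with distance."""
    return 1.0 / (1.0 + abs(tower.position_km - phone_km))


def best_tower(towers, phone_km):
    """Return the tower offering the highest quality signal at this spot."""
    return max(towers, key=lambda t: signal_quality(t, phone_km))


def handoffs(towers, route_km):
    """List (position, tower name) each time the serving tower changes."""
    serving = None
    events = []
    for km in route_km:
        candidate = best_tower(towers, km)
        if candidate != serving:
            serving = candidate
            events.append((km, serving.name))
    return events


towers = [Tower("A", 0.0), Tower("B", 10.0), Tower("C", 20.0)]
route = [0, 2, 4, 6, 8, 10, 12, 14, 16, 18, 20]
print(handoffs(towers, route))  # [(0, 'A'), (6, 'B'), (16, 'C')]
```

The traveler starts on tower A, is handed to B shortly after the midpoint between A and B, and then to C in the same way; the overlap between cells is what lets the phone switch without losing contact.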

The only thing needed for the public to embrace the internet was the creation of its global corporations.

Jeff Bezos was first out of the gate with the creation of Amazon in 1994. It started as an internet bookstore and quickly evolved into the first truly global E-commerce business.

Larry Page and Sergey Brin founded Google in 1998. Larry Page got the idea in 1997 after hearing a presentation about Hyper Search by Massimo Marchiori, an Italian computer-science professor, at an internet conference in Santa Clara, California. Google quickly grew into the top internet search engine. It now offers a broad range of diversified internet services.

Mark Zuckerberg started Facebook in 2004. With more than 2 billion active users, it is the biggest social network in the world.

None of these three companies was first in its field, but each one conquered the world thanks to the drive and the ingenuity of its founders.

And of course, Steve Jobs gave us the iPhone in 2007, allowing anybody, from the youngest to the oldest of us, to access these services without any computer education. Today, we all carry a smartphone, this portable internet Babbage machine (usually stored in our back pockets), without knowing that it all started in 1834!

In 1979, Japan's Ministry of International Trade and Industry set up a committee to explore what the next generation of computers should be. In 1981 they came up with a report, a wish list, that defined what we now call the fifth generation of computers (we look at these generations further down this page).

The main characteristic of a fifth generation computer was its use of massively parallel processing architectures built around microprocessors.

Another goal was to provide for artificial intelligence.[1]

The first true realization of this goal happened in 1997 when the world's top chess player, Garry Kasparov, lost against a one-ton IBM supercomputer named Deep Blue.

Kasparov's mind was, without question, the ultimate expression of human strategy and intuition and yet he couldn't beat this pile of doped crystallized silicon powered by electricity.

Watson, another one of IBM's supercomputers, beat two of Jeopardy!'s super-champions in 2011, thereby winning at a general-knowledge game.

Another casualty was Ke Jie, the world's No. 1 ranked human player of the board game Go, who lost against Google DeepMind's AlphaGo in May of 2017, a bit more than a year ago.

And since Go is the most complex board game there is, we are done! Humanity is officially playing second fiddle to the computer.

A great demonstration of how far we have come happened in June of 2018 when a team of 5 different computer AI algorithms built by OpenAI defeated a team of 5 humans at Dota 2, a popular strategy computer game.

Our current generation of computers is just a few years away from driving our cars and flying our planes. Some are already fighting our wars, under the supervision of human soldiers safely located thousands of miles away while others are bringing space rockets back to Earth autonomously.

It doesn't take much imagination to see, in the very near future, entire branches of our society totally operated without human help.

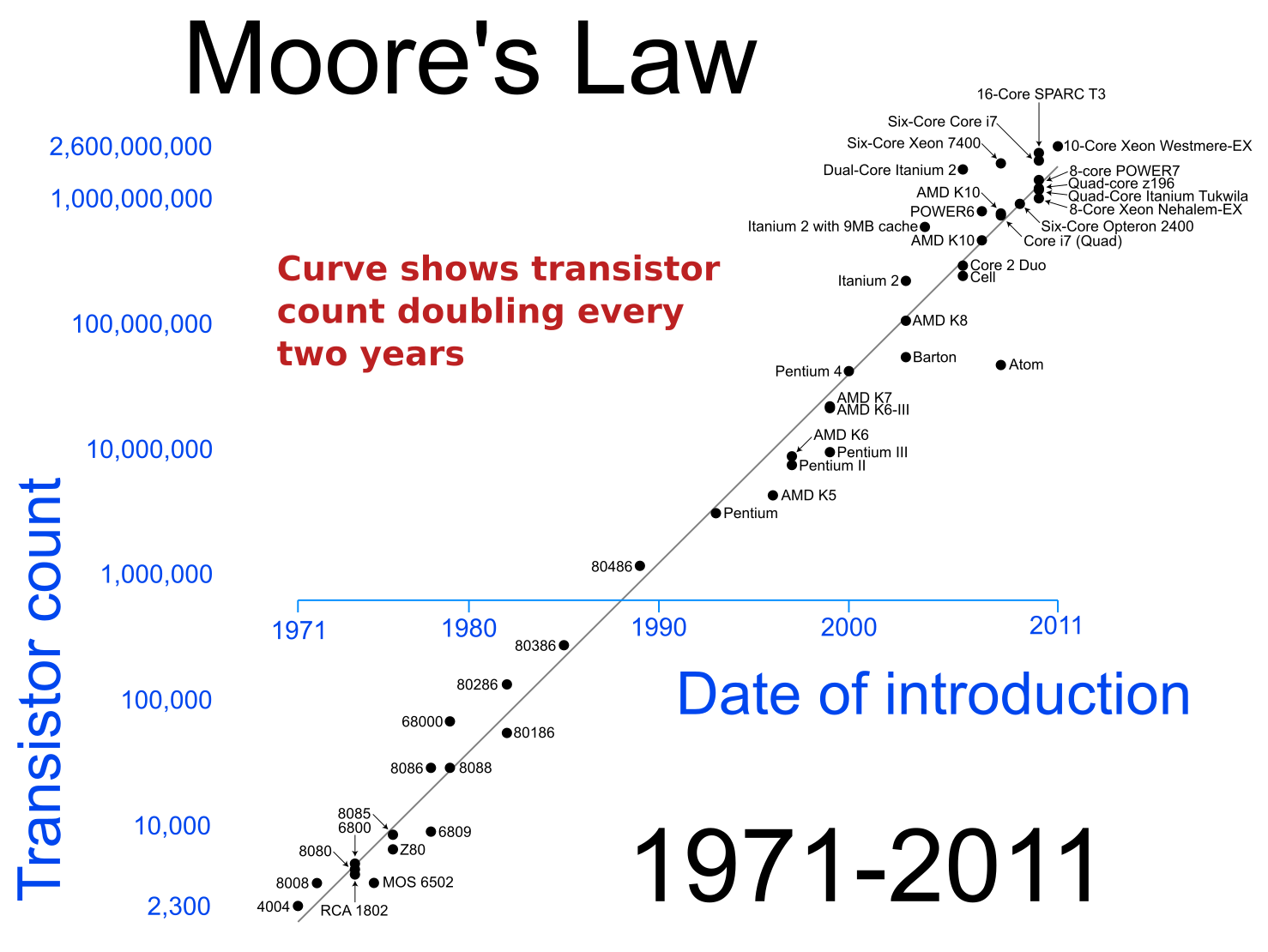

Moore's law for microprocessors

Since the invention of the planar IC, we only have improved on the number of transistors they contain, going from just 2 transistors, to billions, allowing, on the way, for the creation of specific devices like

but nothing as fundamental as the invention of the planar/monolithic IC has happened since.

Some recent developments, where transistors are built in 3D (this time on purpose), should allow for up to thirty billion transistors on one chip, but this will not affect the average person like the planar IC has, at least not yet.

In 1965, six years after the invention of the planar IC, Gordon Moore predicted that the best value for the number of transistors laid on a single piece of silicon would double every year. The best value/integration ratio then was 30 transistors per IC.

He revised his prediction in 1975 to a doubling every two years, which still holds true today. This is called Moore's law.[1,2]
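To get a feel for what these doubling rates imply, here is a small Python sketch. It is a back-of-the-envelope model, not Moore's own formula: it assumes the 30-transistor starting point quoted above for 1965, a doubling every year until the 1975 revision, and a doubling every two years after that. The function name and its parameters are hypothetical.

```python
def projected_transistors(year: int,
                          base_year: int = 1965,
                          base_count: int = 30,
                          revision_year: int = 1975) -> float:
    """Toy projection: yearly doubling up to the revision year,
    then one doubling every two years (assumed piecewise model)."""
    if year < base_year:
        raise ValueError("year must not precede the base year")
    # Doublings at the original rate of one per year.
    yearly_doublings = min(year, revision_year) - base_year
    # Doublings at the revised rate of one every two years.
    biennial_doublings = max(year - revision_year, 0) / 2
    return base_count * 2 ** (yearly_doublings + biennial_doublings)


for y in (1965, 1975, 1985):
    print(y, f"{int(projected_transistors(y)):,}")
# 1965 30
# 1975 30,720
# 1985 983,040
```

Ten yearly doublings multiply the count by 1,024, while the following ten years at the revised rate add only five more doublings (a factor of 32), which shows how much the 1975 revision slowed the projected curve.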

There were three separate geneses of Silicon Valley:

1959-1964 Planar Process

This first genesis started with the invention of the planar process, where silicon transistors could literally be printed (photolithography) on a piece of silicon, which made transistors mass-producible and more reliable.

Because of that, Silicon Valley slowly became the development center for silicon semiconductor devices, winning out over all of the other places like the East Coast (Boston, New York, New Jersey) and Texas.

During this entire period the planar silicon integrated circuit (IC), which had been invented by Bob Noyce there in 1959, was too expensive to be successfully commercialized.

1964-1969 Planar Integrated Circuit

in the spring of 1964, Noyce made a little-discussed but absolutely critical decision. Fairchild would sell its low-end flip-flop integrated circuits for less than it would cost a customer to buy the individual components and connect them himself and less than it was costing Fairchild to build the device.

Noyce's gamble paid off spectacularly; the IC went mainstream within a year. In turn, it lowered production costs to the point where instead of losing money, they made more than they could have anticipated in their wildest dreams.

Just to get an idea of scale, in January 1964, ICs were only bought for a few military projects, especially the Minuteman missile program, but there was no real production to speak of. Just two years later, in 1966, Burroughs placed an order with Fairchild Semiconductor for 20 million ICs!

Gordon Moore said that Noyce's decision to market ICs below cost was

as important an invention for the industry as the integrated circuit itself.

1969-2007 Silicon Babbage Machines

This third and last genesis started with the commercialization of the first semiconductor memories and was quickly followed by the commercialization of the first microprocessor. The world would never be the same again.

From that point on, the intelligence of a Babbage machine could be incorporated in any kind of electronic equipment.

A toaster or a fridge could offer options thought impossible before. It also brought simplicity and speed to the design of the machines that surrounded us.

This is when Silicon Valley turned white-hot. New products, ideas, developments happened every few months. Everybody had to be there so as not to be left behind.

This period ended with the invention of the smartphone by Apple in 2007.

The release of the iPhone in 2007 coincided with a turning point in Silicon Valley where the technology had reached such a high point that nothing more complicated was needed to invent new products.

Today you can develop anything you want by simply using what is already there. Which means that you no longer have to be in the Valley to innovate.

New computer systems and slight improvements in technologies are now being developed all around the world. Silicon Valley is being copied everywhere and the next computer revolution is coming from many different places at once.

For instance, back in 2013, China released Tianhe-2, the world's fastest supercomputer, which had Intel microprocessors inside and could perform at 33 PFLOPS. They topped themselves in 2016 with Sunway TaihuLight, which had Chinese-designed microprocessors inside and clocked in at 93 PFLOPS.

We just got back in the lead with the 2018 release of the IBM Summit which can go up to 200 PFLOPS[1] but the race is not over yet.

Apple developed the modern smartphone in Silicon Valley in 2007 (100% market share) and yet, eleven years later, during the second quarter of 2018, it only ranked third with just 12.1% worldwide market share, behind Huawei of China (15.8%) and Samsung of South Korea (20.9%).[2]

Route 128, around Boston, which was called America's Technology Highway in the 1950s, is making a strong comeback.

Los Angeles' Silicon Beach has more than 500 tech startup companies (and the usual ones), scooping brains from nearby Caltech, UCLA, UC San Diego, USC... SpaceX is developing our next generation of reusable space rockets from there.

Google DeepMind, whose supercomputer defeated the brightest of us, was designed in the UK.

Samsung, the South Korean multinational conglomerate, passed Intel as the world's largest manufacturer of semiconductors in 2017.[3] They also co-developed with IBM and GlobalFoundries a technology to put 30 billion transistors into a chip.

And so on and so forth...

A page has turned, but boy, Silicon Valley had a good fifty year run!

| Major Milestones |
|---|
| Charles Babbage invented the computer. Howard Aiken spurred IBM into building it |
| The US military sponsored Eckert & Mauchly's invention of the modern, stored-program computer at UPenn. Once WWII was over, the military gave it away to the world, going open-source with the Moore School Lectures |
| MIT gave us the first real-time, time-sharing computer. AT&T linked a bunch of them into the first wide area network and IBM gave them the first interactive user interface (screen & pointing device) |
| AT&T invented the silicon transistor. At Fairchild Semiconductor, Jean Hoerni made it flat and Bob Noyce gave us the planar Integrated Circuit (and Silicon Valley) |
| UCLA & Stanford University were the first backbone of Arpanet, which evolved into the Internet. Ted Nelson created hypertext. Tim Berners-Lee of CERN bundled both into the World Wide Web |
| Intel gave us the microprocessor (Ted Hoff) and semiconductor memories, which allowed for portable devices of all kinds |
| Ed Roberts from MITS designed the first true PC, the Altair 8800, as soon as microprocessors became powerful enough for the task. Apple took the lead shortly after, followed by the IBM PC and its clones |
| AT&T invented and implemented the Cell Phone infrastructure. Marty Cooper of Motorola built the first cell phones that used it |
| Silicon Valley kept on shrinking the size of computer parts, so much so that Steve Jobs was able to combine all of these previous innovations into the iPhone in 2007 |